The digital universe is expanding at an astonishing rate, demanding infrastructure that can store unprecedented volumes of data and supply unprecedented processing power. At the heart of this expansion lie hyperscale data centers: colossal facilities designed for massive scalability, efficiency, and resilience. Far more than large server farms, these behemoths are the foundational pillars of the modern digital economy, powering everything from social media interactions and streaming entertainment to complex scientific simulations and the burgeoning field of artificial intelligence. Understanding hyperscale data centers is crucial to grasping the future of technology, because they are not merely accommodating growth; they are actively shaping it.

What Defines a Hyperscale Data Center?

Several key characteristics distinguish a hyperscale data center from a traditional enterprise data center, chiefly its scale, design, and operational philosophy.

A. Unprecedented Scale: Hyperscale facilities are, by definition, enormous. A single campus can house tens or hundreds of thousands of servers across floor space often equivalent to multiple football fields, and an operator's global fleet can run to millions of servers. This immense scale allows them to meet the computational demands of global services with millions or even billions of users, such as those offered by tech giants like Google, Amazon, Microsoft, and Meta. It also enables economies of scale that smaller operations simply cannot attain.

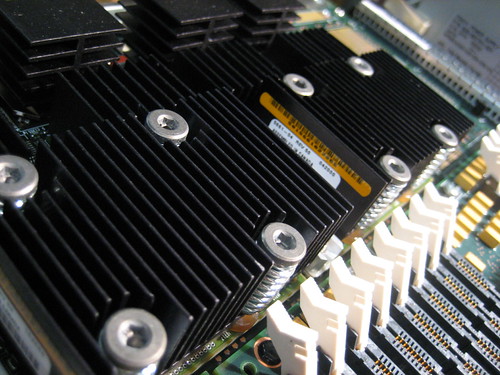

B. Modular and Scalable Design: Rather than monolithic structures, hyperscale data centers are built with modularity in mind. They often consist of repeatable blocks or pods of servers, storage, and networking equipment that can be rapidly deployed and interconnected. This modularity ensures that as demand grows, capacity can be added swiftly and efficiently, minimizing downtime and optimizing resource utilization. The “building block” approach facilitates rapid expansion without requiring a complete redesign of the facility.
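To make the building-block idea concrete, here is a minimal sketch of pod-based capacity planning. The pod size, the 20% headroom default, and the function names `pods_needed` and `pods_to_add` are hypothetical illustrations, not any operator's actual planning rules; the point is only that modular capacity is added in whole units, so demand is rounded up to the next full pod.

```python
def pods_needed(required_servers: int, servers_per_pod: int, headroom_pct: int = 20) -> int:
    """Whole pods needed to cover demand plus a safety headroom (hypothetical rule).

    Integer arithmetic avoids floating-point rounding surprises.
    """
    if servers_per_pod <= 0:
        raise ValueError("servers_per_pod must be positive")
    if required_servers < 0 or headroom_pct < 0:
        raise ValueError("demand and headroom must be non-negative")
    # Demand including headroom, scaled by 100 so the percentage stays an integer.
    target_x100 = required_servers * (100 + headroom_pct)
    # Ceiling division: a partially needed pod must still be deployed in full.
    return -(-target_x100 // (servers_per_pod * 100))


def pods_to_add(current_pods: int, required_servers: int, servers_per_pod: int,
                headroom_pct: int = 20) -> int:
    """Additional pods to deploy; never negative (this sketch does not decommission)."""
    return max(0, pods_needed(required_servers, servers_per_pod, headroom_pct) - current_pods)


if __name__ == "__main__":
    print(pods_needed(10_000, 2_000))     # 6
    print(pods_to_add(4, 10_000, 2_000))  # 2
```

With hypothetical 2,000-server pods, a demand of 10,000 servers plus 20% headroom needs 6 pods, so a site already running 4 pods would add 2.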

C. Optimized for Specific Workloads: While traditional data centers might host a diverse range of applications, hyperscale facilities are meticulously engineered and optimized for the specific workloads of their operators. This specialization allows for extreme efficiencies in power consumption, cooling, and hardware selection. For instance, a hyperscale data center primarily serving AI model training will have a different hardware configuration and cooling strategy than one focused on web serving or cloud storage. This tailored approach maximizes performance and minimizes operational expenditure.

D. High Level of Automation: The sheer number of components within a hyperscale data center makes manual management impractical, if not impossible. Consequently, automation is paramount. Everything from server provisioning and software deployment to power management, cooling optimization, and fault detection is highly automated. This reliance on sophisticated orchestration software reduces human error, increases operational efficiency, and allows for rapid responses to changing conditions or failures. Automation is the engine that allows these vast infrastructures to run with minimal human intervention.
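A heavily simplified sketch of one automated fault-handling step: detect servers whose heartbeats have gone quiet, then move their workloads onto healthy machines. Heartbeat-based detection, the 30-second timeout, round-robin placement, and the names `detect_failed` and `reschedule` are all assumptions for illustration; production orchestrators weigh capacity, affinity, and failure domains rather than placing work round-robin.

```python
from itertools import cycle


def detect_failed(last_heartbeat: dict[str, float], now: float, timeout_s: float = 30.0) -> list[str]:
    """Return IDs of servers whose most recent heartbeat is older than timeout_s seconds."""
    return sorted(s for s, seen in last_heartbeat.items() if now - seen > timeout_s)


def reschedule(assignments: dict[str, list[str]], failed: list[str]) -> dict[str, list[str]]:
    """Move workloads off failed servers onto healthy ones, round-robin.

    Returns a new server -> workloads map that omits the failed servers;
    the input map is left unchanged.
    """
    failed_set = set(failed)
    healthy = sorted(s for s in assignments if s not in failed_set)
    # Workloads stranded on failed servers, in a deterministic order.
    orphaned = [w for s in sorted(failed_set) for w in assignments.get(s, [])]
    if orphaned and not healthy:
        raise RuntimeError("no healthy servers left to absorb workloads")
    plan = {s: list(assignments[s]) for s in healthy}
    targets = cycle(healthy)
    for workload in orphaned:
        plan[next(targets)].append(workload)
    return plan


if __name__ == "__main__":
    heartbeats = {"srv-a": 100.0, "srv-b": 95.0, "srv-c": 40.0}
    failed = detect_failed(heartbeats, now=100.0)
    print(failed)  # ['srv-c']
    workloads = {"srv-a": ["web-1"], "srv-b": ["db-1"], "srv-c": ["web-2", "batch-7"]}
    print(reschedule(workloads, failed))
    # {'srv-a': ['web-1', 'web-2'], 'srv-b': ['db-1', 'batch-7']}
```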

E. Exceptional Redundancy and Resilience: Given their critical role in supporting global services, hyperscale data centers are designed with extreme levels of redundancy at every layer: power, cooling, networking, and compute. Multiple power feeds, redundant cooling systems, and distributed architectures are engineered so that even significant component failures do not disrupt service. This "fault tolerance by design" is crucial for maintaining the always-on availability demanded by modern digital consumers.
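The payoff of redundancy can be estimated with basic probability. Assuming components fail independently (an idealization, since real facilities also face common-mode failures such as a shared utility outage), n redundant components of which any one suffices are available with probability 1 - (1 - a)^n, where a is each component's availability. An N+1 design, which needs N of its N+1 units running, follows the binomial "at least k of n" formula. The function names below are hypothetical.

```python
from math import comb


def parallel_availability(a: float, n: int) -> float:
    """Availability of n independent redundant components when any single one suffices."""
    return 1 - (1 - a) ** n


def k_of_n_availability(a: float, k: int, n: int) -> float:
    """Probability that at least k of n independent components (each up with probability a) are up.

    An N+1 design corresponds to k = N, n = N + 1.
    """
    return sum(comb(n, i) * a**i * (1 - a) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    print(f"{parallel_availability(0.99, 2):.4%}")   # two power feeds, either one suffices
    print(f"{k_of_n_availability(0.99, 3, 4):.4%}")  # four cooling units, any three suffice
```

Under these assumptions, two power feeds that are each 99% available give 1 - 0.01^2 = 99.99% availability, and four cooling units of which any three suffice (a 3+1 design) give roughly 99.94%.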

F. Proprietary Hardware and Software: To achieve optimal performance and efficiency, many hyperscale operators design and build their own custom hardware and software. This includes everything from bespoke server designs that prioritize power efficiency and specific component layouts to highly optimized operating systems and data center management software. This vertical integration allows for a level of fine-tuning that off-the-shelf solutions simply cannot match, giving them a significant competitive advantage.

The Driving Forces Behind Hyperscale Growth

The explosive growth of hyperscale data centers is not accidental; it is a direct response to several powerful technological and economic trends.

A. The Cloud Computing Revolution: Perhaps the single biggest catalyst for hyperscale expansion is the ubiquitous adoption of cloud computing. Services like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are built entirely on hyperscale infrastructure. As more businesses and individuals migrate their data and applications to the cloud, the demand for underlying hyperscale capacity surges. The elasticity and on-demand nature of cloud services fundamentally rely on the vast, scalable resources provided by hyperscale data centers.
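Elasticity usually means scaling capacity in proportion to load. The sketch below uses a proportional target-tracking rule, similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current * observed / target)); the function name, the integer-percent units, and the min/max bounds are assumptions for illustration rather than any cloud provider's actual algorithm.

```python
def desired_replicas(current: int, observed_pct: int, target_pct: int,
                     min_replicas: int = 1, max_replicas: int = 1000) -> int:
    """Proportional target tracking: scale so average utilization approaches target_pct.

    desired = ceil(current * observed_pct / target_pct), clamped to [min_replicas, max_replicas].
    """
    if current < 1:
        raise ValueError("need at least one running replica to measure utilization")
    if target_pct <= 0:
        raise ValueError("target_pct must be positive")
    # Integer ceiling division keeps the result exact (no float rounding).
    raw = -(-(current * observed_pct) // target_pct)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(10, 90, 60))  # scale out under heavy load
    print(desired_replicas(10, 30, 60))  # scale in when load drops
```

With 10 instances at 90% average utilization and a 60% target, the rule asks for 15 instances; at 30% it shrinks the pool to 5. The clamps keep a runaway metric from scaling the pool to zero or without bound.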

B. Big Data and Analytics: The volume of data being generated keeps growing rapidly. Every click, every search, and every sensor reading contributes to an ever-growing ocean of information. Analyzing this data to extract valuable insights requires immense computational power and storage, precisely what hyperscale facilities offer. From customer behavior analysis to scientific research, the ability to store and process petabytes, and increasingly exabytes, of data is a core function of these centers.

C. Artificial Intelligence (AI) and Machine Learning (ML): AI and ML workloads, particularly the training of deep learning models, are incredibly compute-intensive. They demand specialized hardware like GPUs and high-bandwidth interconnects, making hyperscale environments ideal. The ability to deploy thousands of accelerators in parallel within a controlled, optimized environment accelerates the development and deployment of advanced AI applications, driving innovation across countless industries.

D. Internet of Things (IoT): The proliferation of interconnected devices, from smart home appliances to industrial sensors, generates continuous streams of data. While some processing occurs at the edge (closer to the data source), much of this data needs to be aggregated, processed, and stored in central repositories – often within hyperscale data centers – for deeper analysis and long-term retention.

E. 5G Network Deployment: The rollout of 5G networks promises unprecedented speed and lower latency, unlocking new possibilities for applications like autonomous vehicles, augmented reality, and real-time industrial automation. To fully realize this potential, 5G requires a robust backend infrastructure, with hyperscale data centers serving as central hubs for data processing and content delivery, working in concert with edge computing nodes.

F. Content Delivery Networks (CDNs): Streaming video, online gaming, and rich media content dominate internet traffic. CDNs cache this content on geographically distributed servers (points of presence), many of them located in or closely interconnected with hyperscale networks, so that it reaches users worldwide quickly and reliably with minimal latency and buffering.

The Environmental Footprint and Sustainability Efforts

The immense scale of hyperscale data centers inevitably raises concerns about their environmental impact, particularly their energy consumption and water usage. However, leading operators are also at the forefront of sustainability initiatives.

A. Energy Efficiency: Hyperscale providers are constantly innovating to lower their Power Usage Effectiveness (PUE), the ratio of the total energy entering the data center to the energy actually used by the IT equipment. A PUE of 1.0 would mean every kilowatt-hour reaches the servers, storage, and networking gear; anything above that is overhead such as cooling, lighting, and power conversion losses. Efforts include optimizing cooling systems (e.g., using outside air for “free cooling” and liquid cooling for high-density racks), deploying energy-efficient hardware, and leveraging advanced power management techniques. Some facilities are even designed to capture and reuse waste heat.
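The PUE ratio itself is simple arithmetic. The sketch below computes it from two hypothetical metered energy totals, along with the share of energy consumed by non-IT overhead and the energy saved by lowering PUE at a constant IT load; the input figures and function names are illustrative, not measurements or tooling from any facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Fraction of total energy spent on non-IT overhead (cooling, lighting, power conversion)."""
    if total_facility_kwh <= 0:
        raise ValueError("total facility energy must be positive")
    return (total_facility_kwh - it_equipment_kwh) / total_facility_kwh


def energy_saved_kwh(it_equipment_kwh: float, pue_before: float, pue_after: float) -> float:
    """Facility energy saved by lowering PUE while the IT load stays the same."""
    return it_equipment_kwh * (pue_before - pue_after)


if __name__ == "__main__":
    total, it = 1_200_000.0, 1_000_000.0  # hypothetical annual kWh figures
    print(round(pue(total, it), 3))                # 1.2
    print(round(overhead_share(total, it), 3))     # 0.167
    print(round(energy_saved_kwh(it, 1.5, 1.2)))   # 300000
```

For example, a facility drawing 1,200 MWh in total to deliver 1,000 MWh to IT equipment has a PUE of 1.2, so about 16.7% of its energy goes to overhead; lowering PUE from 1.5 to 1.2 at that IT load would save about 300 MWh.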

B. Renewable Energy Sourcing: Many hyperscale operators have committed to powering their facilities with 100% renewable energy. This involves direct procurement from wind and solar farms, investing in renewable energy projects, and purchasing renewable energy credits. Their massive energy demands make them significant players in the global transition to clean energy.

C. Water Conservation: Cooling systems in data centers often consume substantial amounts of water. Operators are exploring and implementing various water-saving techniques, such as using recycled water, adopting closed-loop cooling systems, and researching alternative cooling methods that reduce or eliminate water dependency.

D. Responsible Equipment Disposal: Given the constant upgrades and refreshes of hardware, hyperscale companies are developing robust programs for the responsible recycling and disposal of electronic waste, aiming to minimize their environmental footprint throughout the equipment lifecycle.

The Future of Hyperscale: Trends and Innovations

The evolution of hyperscale data centers is far from over. Several key trends and innovations are shaping their future.

A. Increased Automation and AI for Operations: The level of automation will continue to deepen, with AI and ML increasingly used to optimize energy consumption, predict hardware failures, manage workloads, and even autonomously troubleshoot issues. This will lead to even greater efficiency and reliability.

B. Hybrid and Multi-Cloud Architectures: While hyperscale data centers are the backbone of public clouds, enterprises are increasingly adopting hybrid cloud strategies (combining on-premises infrastructure with public cloud) and multi-cloud approaches (using multiple public cloud providers). Hyperscale operators are adapting to support these complex, interconnected environments.

C. Closer Integration with Edge Computing: As discussed, the rise of IoT and real-time applications necessitates processing data closer to its source, at the edge. Hyperscale data centers will work in closer conjunction with edge computing nodes, acting as central aggregation and processing hubs for data collected at the periphery of the network. This distributed compute model reduces latency for time-sensitive processing and cuts the bandwidth needed to move raw data to central facilities.
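One common pattern for the edge/hub split is for edge nodes to condense raw readings into compact summaries before forwarding them. A minimal sketch, assuming hypothetical `(sensor_id, timestamp_s, value)` tuples and fixed 60-second windows: each window of raw readings collapses into a single count/min/max/mean record, which is what shrinks the volume sent to the central facility. The function name `summarize` is illustrative.

```python
from collections import defaultdict


def summarize(readings, window_s: int = 60) -> dict:
    """Collapse raw (sensor_id, timestamp_s, value) readings into per-window summaries.

    Returns {(sensor_id, window_start_s): {"count", "min", "max", "mean"}},
    ordered by sensor and window start.
    """
    buckets = defaultdict(list)
    for sensor_id, ts, value in readings:
        window_start = int(ts // window_s) * window_s  # align to the window boundary
        buckets[(sensor_id, window_start)].append(value)
    return {
        key: {"count": len(vals), "min": min(vals), "max": max(vals),
              "mean": sum(vals) / len(vals)}
        for key, vals in sorted(buckets.items())
    }


if __name__ == "__main__":
    raw = [("t1", 0, 20.0), ("t1", 10, 22.0), ("t1", 70, 30.0)]
    for key, summary in summarize(raw).items():
        print(key, summary)
```

Here three raw readings become two summary records, one per window; with many readings per window the reduction is far larger.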

D. Advanced Cooling Technologies: With increasing server density and the power demands of AI chips, traditional air cooling is becoming less effective. Expect to see wider adoption of advanced cooling methods like liquid cooling, including direct-to-chip and immersion cooling, which offer superior heat dissipation and energy efficiency.

E. Quantum Computing Integration (Long-Term): While still in its nascent stages, quantum computing has the potential to solve problems intractable for classical computers. Hyperscale operators are investing in research and development to explore how quantum computing resources could be integrated into their existing infrastructure, offering quantum-as-a-service to clients.

F. Software-Defined Everything (SDX): The concept of defining and managing infrastructure entirely through software will continue to mature. This allows for greater flexibility, agility, and automated provisioning of resources across compute, storage, and networking layers within hyperscale environments.
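Software-defined infrastructure is commonly managed declaratively: operators describe the desired state, and a controller computes the changes needed to converge on it. A minimal sketch of that diffing step, assuming resources are identified by hypothetical names with dictionary specs; real controllers also order dependencies, retry failures, and run this comparison continuously.

```python
def reconcile(desired: dict[str, dict], actual: dict[str, dict]) -> list[tuple[str, str]]:
    """Compute the actions that converge `actual` infrastructure onto `desired`.

    Returns (action, resource_name) pairs: creates first, then updates, then deletes.
    """
    actions: list[tuple[str, str]] = []
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:  # running spec has drifted from the declaration
            actions.append(("update", name))
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {"vlan-100": {"subnet": "10.0.0.0/24"}, "vm-web-1": {"cpus": 4}}
    actual = {"vm-web-1": {"cpus": 2}, "vm-old": {"cpus": 2}}
    print(reconcile(desired, actual))
    # [('create', 'vlan-100'), ('update', 'vm-web-1'), ('delete', 'vm-old')]
```

Because the controller only compares declared and observed state, the same loop handles initial provisioning, configuration drift, and cleanup of resources nobody declared.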

G. Security Fortification: As the central repositories for vast amounts of sensitive data, security will remain a paramount concern. Hyperscale data centers will continue to invest heavily in advanced cybersecurity measures, including sophisticated threat detection systems, encryption at every layer, and robust access controls, often leveraging AI for real-time threat intelligence.

Hyperscale data centers are more than just physical buildings; they are dynamic, intelligent ecosystems of hardware, software, and automation, constantly evolving to meet the insatiable demands of the digital age. They are the silent, powerful engines driving global connectivity, innovation, and economic growth. As technology continues its relentless march forward, these massive digital factories will remain the indispensable backbone, quietly powering the future of our interconnected world.