High Performance Computing (HPC) stands as the bedrock of modern scientific discovery and engineering innovation. Far beyond the capabilities of everyday desktop machines, HPC leverages the collective power of thousands, or even millions, of interconnected processors to tackle computational problems of immense scale and complexity. This technological prowess isn’t just about raw speed; it’s about enabling researchers to simulate phenomena, analyze vast datasets, and model intricate systems with a level of detail and accuracy previously unimaginable. From decoding the human genome to predicting climate patterns, and from designing new materials to simulating the birth of galaxies, HPC is the unseen engine propelling humanity’s quest for knowledge and progress. It provides the computational muscle to accelerate the pace of breakthroughs, transforming theoretical concepts into tangible insights and solutions that impact every facet of our lives.

The Genesis and Evolution of High Performance Computing

To truly appreciate the current impact and future potential of HPC, it’s crucial to understand its origins and the remarkable evolution it has undergone. The journey from early calculating machines to today’s petascale and exascale supercomputers is a testament to relentless human ingenuity.

A. Early Days: The Dawn of Computational Power

The concept of high-speed computation dates back to the mid-20th century. The earliest high-performance machines, though primitive by today’s standards, were groundbreaking. Machines like the UNIVAC I (1951) and the IBM 7030 Stretch (1961) laid the foundational bricks. These were singular, massive machines, often custom-built for specific scientific or military calculations. Their speed was measured in thousands or millions of operations per second, a remarkable feat at the time but dwarfed by today’s processors. The focus was on raw arithmetic performance for complex, often repetitive, calculations. Early applications were primarily in fields such as cryptography, nuclear physics, and weather forecasting, where the sheer volume of numbers necessitated automated, high-speed processing. These machines were expensive, required vast amounts of power and cooling, and were accessible only to a select few government agencies and large research institutions.

B. The Vector Processing Era: Specialization for Speed

The 1970s and 80s witnessed the rise of vector processors. Companies like Cray Research, founded by Seymour Cray, became synonymous with supercomputing. Machines like the Cray-1 (1976) introduced architectures specifically designed to perform operations on entire arrays (vectors) of data with a single instruction, rather than processing each element individually. This parallelism within a single processor unit dramatically accelerated computations for scientific applications rich in linear algebra, such as fluid dynamics, structural analysis, and seismic processing. This era solidified the idea that specialized hardware could deliver performance far beyond general-purpose computers, further differentiating supercomputing as a distinct field. While still incredibly expensive, vector machines marked a significant leap in computational throughput for specific scientific workloads.

C. The Rise of Massively Parallel Processing (MPP): Distributed Power

The late 1980s and 1990s marked a pivotal shift towards Massively Parallel Processing (MPP). The realization that linking many less expensive, off-the-shelf processors could surpass the performance of even the most powerful single vector machine spurred this revolution. Systems like the Intel Paragon and Thinking Machines CM-5 demonstrated the viability of this approach. MPP systems achieved their speed by distributing computational tasks across hundreds or thousands of independent processing nodes, each with its own memory. Communication between these nodes became a critical challenge, leading to the development of sophisticated interconnect networks and the Message Passing Interface (MPI) standard. This era democratized supercomputing to some extent, making it accessible to a broader range of academic and industrial research labs, moving away from purely custom, monolithic designs.

D. Cluster Computing and Grid Computing: Leveraging Commodity Hardware

The early 2000s saw the widespread adoption of cluster computing. This approach leveraged commodity hardware (standard servers and networking components) to build powerful supercomputing systems. The concept, which grew out of the Beowulf project of the mid-1990s, was simple: many inexpensive off-the-shelf computers, often running Linux, could be networked together to form a “Beowulf cluster.” This significantly reduced the cost of entry for building large-scale computational resources. Following this, Grid Computing emerged, aiming to create virtual supercomputers by pooling geographically dispersed computational resources across different organizations. Projects like the Worldwide LHC Computing Grid, built to serve the Large Hadron Collider, exemplify this model, allowing researchers worldwide to share and access vast computational power and data storage. These developments pushed HPC into a more distributed and accessible realm.

E. Hybrid Architectures and Heterogeneous Computing: The Modern Era

Today’s HPC landscape is dominated by hybrid architectures, also known as heterogeneous computing. This involves combining different types of processing units within the same system to optimize for various computational tasks. Graphics Processing Units (GPUs), originally designed for rendering complex graphics, have become indispensable in HPC due to their highly parallel architecture, making them exceptionally efficient for tasks like machine learning, molecular dynamics, and cryptanalysis. Field-Programmable Gate Arrays (FPGAs) and custom ASICs are also gaining traction as highly specialized accelerators. Leading supercomputers such as Frontier and Aurora pair GPUs with traditional CPUs, representing a paradigm shift in which parallel accelerators provide the majority of the system’s floating-point performance. Fugaku takes a different route, relying on Arm-based CPUs with wide vector units rather than GPUs. This diversification of processing elements is key to achieving exascale (a quintillion, or 10^18, floating-point operations per second) and beyond.

Fundamental Components and Architecture of HPC Systems

Understanding how HPC systems achieve their extraordinary computational power requires a look at their core components and the way they are architected to work in concert.

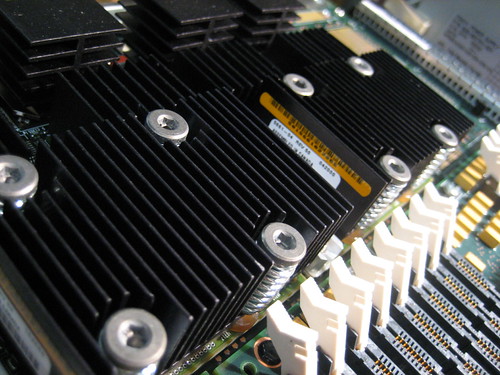

A. Compute Nodes: The Workhorses

The fundamental building blocks of any HPC system are its compute nodes. Each node is essentially a powerful server containing one or more Central Processing Units (CPUs) – often high-core count server-grade processors like Intel Xeon or AMD EPYC. Modern nodes also frequently include one or more accelerators, most commonly Graphics Processing Units (GPUs) from NVIDIA (e.g., A100, H100) or AMD (e.g., Instinct MI series), which provide massive parallel processing capabilities for specific types of workloads. Each node also has its own dedicated memory (RAM) and sometimes local storage. The sheer number of these nodes, ranging from hundreds to tens of thousands in a single supercomputer, is what enables the immense scale of HPC.
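
As a rough illustration of how node counts translate into aggregate capability, the sketch below multiplies per-device peak rates by device counts. Every figure in it (node count, devices per node, per-device TFLOP/s) is a hypothetical placeholder chosen for easy arithmetic, not the specification of any real processor or system, and theoretical peak is always higher than what applications actually sustain.

```python
from dataclasses import dataclass


@dataclass
class NodeSpec:
    """Hypothetical compute node; all rates are assumed peak FP64 TFLOP/s."""
    cpus: int
    cpu_tflops: float
    gpus: int
    gpu_tflops: float

    def peak_tflops(self) -> float:
        # Node peak is the sum over all CPUs and all accelerators.
        return self.cpus * self.cpu_tflops + self.gpus * self.gpu_tflops


def system_peak_pflops(node: NodeSpec, num_nodes: int) -> float:
    """Theoretical peak of the whole machine in PFLOP/s (1 PFLOP/s = 1000 TFLOP/s)."""
    return node.peak_tflops() * num_nodes / 1000.0


def gpu_share(node: NodeSpec) -> float:
    """Fraction of a node's peak that comes from its accelerators."""
    return node.gpus * node.gpu_tflops / node.peak_tflops()


# Placeholder values, not vendor specifications.
node = NodeSpec(cpus=1, cpu_tflops=2.0, gpus=4, gpu_tflops=50.0)
print(system_peak_pflops(node, num_nodes=5000))  # 1010.0
print(f"{gpu_share(node):.1%} of peak comes from GPUs")
```

With these made-up numbers, 5,000 nodes land just past the exascale mark (1,000 PFLOP/s), and nearly all of the peak comes from the accelerators.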

B. Interconnect Network: The Communication Backbone

The performance of an HPC system is critically dependent on its interconnect network, which serves as the communication backbone between thousands of compute nodes. Unlike commodity Ethernet, HPC networks are designed for extremely high bandwidth and, crucially, very low latency. Technologies like InfiniBand (a dominant choice) and Slingshot (HPE Cray’s Ethernet-compatible interconnect built for HPC traffic) enable rapid data exchange between nodes, minimizing communication bottlenecks that can cripple parallel applications. The network topology (e.g., fat-tree, torus) is also meticulously designed to ensure efficient data flow, especially for applications that require frequent communication between many nodes. A slow interconnect can negate the benefits of fast processors.
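
To see why latency matters as much as bandwidth, consider a simple and widely used cost model in which sending a message takes a fixed startup latency plus the message size divided by the bandwidth. The sketch below applies that model to two networks; the latency and bandwidth values are hypothetical round numbers picked for illustration, not measurements of InfiniBand, Slingshot, or any Ethernet product.

```python
def transfer_time(message_bytes: float, latency_s: float,
                  bandwidth_bytes_per_s: float) -> float:
    """Latency-bandwidth model: time = startup latency + size / bandwidth."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


# Hypothetical network parameters (assumptions, not vendor figures).
FAST = dict(latency_s=1e-6, bandwidth_bytes_per_s=25e9)
SLOW = dict(latency_s=50e-6, bandwidth_bytes_per_s=1.25e9)

for size in (8, 64 * 1024, 64 * 1024 * 1024):
    t_fast = transfer_time(size, **FAST)
    t_slow = transfer_time(size, **SLOW)
    print(f"{size:>10d} B  fast={t_fast:.2e} s  slow={t_slow:.2e} s  "
          f"slowdown={t_slow / t_fast:.1f}x")
```

Under these assumptions, tiny messages are dominated by latency (the slowdown approaches the 50x latency ratio), while very large messages are dominated by bandwidth (the slowdown approaches the 20x bandwidth ratio). Applications that exchange many small messages are therefore especially sensitive to interconnect latency.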

C. Storage Systems: Managing Massive Datasets

HPC workloads are inherently data-intensive, generating and consuming petabytes, even exabytes, of information. Therefore, robust and high-performance storage systems are paramount. These are typically organized in a hierarchical manner:

- High-Performance Parallel File Systems (PFS): Systems like Lustre and GPFS (IBM Spectrum Scale) are designed to serve data to thousands of compute nodes concurrently at very high speeds. They aggregate the I/O (input/output) bandwidth of many storage devices, presenting a single, massive file system image to the entire cluster.

- Burst Buffers: These are high-speed, non-volatile memory (NVM) based storage layers (e.g., NVMe SSDs) strategically placed between the compute nodes and the main parallel file system. They act as a temporary, ultra-fast buffer to absorb bursts of I/O, allowing applications to write data very quickly and then have it asynchronously moved to slower, higher-capacity storage.

- Archival Storage: For long-term preservation of massive datasets and simulation results, tape libraries or object storage systems are used, offering enormous capacity at a lower cost, though with slower access times.
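
The way a parallel file system aggregates bandwidth can be sketched with simple round-robin striping: a file is cut into fixed-size stripes that are dealt out across several storage targets, so a large read or write touches many devices at once. The function names and parameters below are illustrative only; real systems such as Lustre and GPFS use more elaborate layouts and metadata, so treat this as a simplified model rather than either system's actual algorithm.

```python
from typing import List, Tuple


def locate(offset: int, stripe_size: int, stripe_count: int) -> Tuple[int, int]:
    """Map a byte offset in a file to (target index, offset inside that target's object)."""
    stripe_index = offset // stripe_size
    target = stripe_index % stripe_count
    # Every full pass over all targets adds one stripe to each target's object.
    full_passes = stripe_index // stripe_count
    return target, full_passes * stripe_size + offset % stripe_size


def targets_touched(offset: int, length: int, stripe_size: int,
                    stripe_count: int) -> List[int]:
    """Storage targets involved in reading `length` (> 0) bytes starting at `offset`."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return sorted({s % stripe_count for s in range(first, last + 1)})


MIB = 1 << 20
print(locate(4 * MIB + 5, stripe_size=MIB, stripe_count=4))        # (0, 1048581)
print(targets_touched(0, 4 * MIB, stripe_size=MIB, stripe_count=4))  # [0, 1, 2, 3]
print(targets_touched(0, 100, stripe_size=MIB, stripe_count=4))      # [0]
```

A 4 MiB read spreads across all four targets and can proceed in parallel, whereas a 100-byte read hits a single target and gains nothing from striping.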

D. Cooling and Power Infrastructure: Sustaining the Beasts

The immense computational power of HPC systems generates an incredible amount of heat, requiring sophisticated cooling systems. Liquid cooling, often involving direct-to-chip cooling or immersion cooling, is increasingly prevalent in modern supercomputers to efficiently dissipate heat and improve energy efficiency. Equally critical is the power infrastructure, which must deliver megawatts of stable electricity to the system, often requiring dedicated substations and redundant power supplies to ensure continuous operation. The power consumed by a single supercomputer can rival that of a small town.

E. Software Stack: Orchestrating Complexity

The hardware prowess of HPC is unleashed by a complex and specialized software stack:

- Operating System: Typically a flavor of Linux (e.g., RHEL, SUSE Linux Enterprise, Cray Linux Environment) optimized for large-scale parallel processing.

- Resource Managers/Job Schedulers: Tools like Slurm, PBS Pro, or LSF manage the allocation of computational resources (nodes, CPUs, GPUs) to various user jobs, ensuring fair sharing and efficient utilization of the expensive hardware.

- Parallel Programming Models:

  - MPI (Message Passing Interface): The de facto standard for inter-process communication in distributed memory systems, allowing processes on different nodes to exchange data.

  - OpenMP (Open Multi-Processing): Used for shared-memory parallelism within a single compute node (e.g., utilizing multiple CPU cores).

  - CUDA/OpenCL/HIP: Programming models for GPUs and other accelerators, enabling developers to harness their massive parallel capabilities.

- Optimized Compilers and Libraries: Specialized compilers (e.g., Intel Fortran/C++, GCC, NVIDIA HPC SDK) and highly optimized scientific libraries (e.g., BLAS, LAPACK, FFTW, cuDNN) are crucial for extracting maximum performance from the underlying hardware, often providing pre-optimized routines for common mathematical operations.
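
The pattern that underlies most of these programming models is the same: split the data into blocks, let each worker compute on its own block, then combine the partial results in a reduction. The sketch below shows that structure using Python's standard thread pool. It is not MPI or OpenMP code, the helper names are made up for this example, and because of Python's global interpreter lock it demonstrates the decomposition rather than any real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def block_range(n: int, num_workers: int, rank: int) -> range:
    """Contiguous block of indices owned by worker `rank` when n items are split evenly."""
    base, extra = divmod(n, num_workers)
    # The first `extra` workers each take one additional item.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


def partial_sum(data, rank: int, num_workers: int) -> float:
    """Work done independently by one worker on its own block."""
    return sum(data[i] for i in block_range(len(data), num_workers, rank))


def parallel_sum(data, num_workers: int = 4) -> float:
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = pool.map(lambda r: partial_sum(data, r, num_workers),
                            range(num_workers))
        return sum(partials)  # reduction: combine the per-worker results


data = list(range(1, 101))
print(parallel_sum(data, num_workers=4))  # 5050
```

In an MPI program each block would live in a separate process, possibly on a different node, and the final combination would be a collective reduction over the network. In OpenMP the same idea appears as a parallel loop with a reduction clause.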

Transformative Applications and Scientific Breakthroughs Driven by HPC

HPC is not an abstract concept; it is the engine behind some of the most profound scientific discoveries and technological advancements of our time. Its applications span virtually every scientific and engineering discipline.

A. Climate Modeling and Environmental Science

HPC plays a pivotal role in climate modeling and environmental science. Sophisticated climate models, running on the world’s most powerful supercomputers, simulate the Earth’s complex climate system, integrating atmospheric, oceanic, land surface, and cryospheric processes. These models are crucial for:

- Predicting Future Climate Change: Generating long-term climate projections under various emissions scenarios, informing policy decisions on global warming.

- Understanding Extreme Weather Events: Simulating the dynamics of hurricanes, tornadoes, and floods with higher resolution, leading to improved early warning systems and disaster preparedness.

- Oceanography and Pollution Studies: Modeling ocean currents, sea level rise, and the dispersion of pollutants, aiding in marine conservation and environmental protection.

These simulations require immense computational power due to the sheer number of variables, the resolution required, and the long time scales involved.
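
A back-of-the-envelope calculation shows why resolution drives cost so steeply. The sketch below counts grid cells for a global model and estimates how cost grows under refinement. It assumes uniform square cells, a fixed number of vertical levels, and a time step that must shrink in proportion to the grid spacing (a common stability constraint). Real climate models use more complicated grids, so the numbers are indicative only.

```python
EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of the Earth


def grid_cells(horizontal_km: float, vertical_levels: int) -> float:
    """Approximate number of cells for a global grid with square cells of the given size."""
    return EARTH_SURFACE_KM2 / horizontal_km ** 2 * vertical_levels


def relative_cost(coarse_km: float, fine_km: float) -> float:
    """Cost multiplier when refining the horizontal grid, vertical levels held fixed.

    Assumes cost ~ (cells) x (time steps) and time step proportional to grid spacing.
    """
    refinement = coarse_km / fine_km
    return refinement ** 2 * refinement  # more cells in 2 directions, more steps


for dx in (100.0, 10.0, 1.0):
    print(f"{dx:6.1f} km grid: {grid_cells(dx, vertical_levels=50):.2e} cells, "
          f"{relative_cost(100.0, dx):.0e}x the cost of a 100 km run")
```

Under these assumptions, going from a 100 km grid to a 1 km grid multiplies the work by roughly a factor of a million, before any additional variables or longer simulated periods are considered.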

B. Computational Fluid Dynamics (CFD) and Aerodynamics

Computational Fluid Dynamics (CFD) is a cornerstone application of HPC, essential for designing anything that moves through a fluid (air or liquid). From optimizing the aerodynamics of Formula 1 cars and commercial aircraft to designing more efficient wind turbines and predicting blood flow in arteries, CFD simulations on supercomputers are indispensable. Key applications include:

- Optimize Aircraft and Automotive Design: Testing virtual prototypes to minimize drag, improve fuel efficiency, and enhance safety without costly physical wind tunnel experiments.

- Turbomachinery Design: Improving the efficiency of jet engines, gas turbines, and pumps by simulating complex airflow and heat transfer.

- Weather Prediction: CFD models are fundamental to numerical weather prediction, simulating atmospheric flows and dynamics at regional and global scales. The resolution and accuracy of these predictions directly correlate with the computational power available.

C. Materials Science and Engineering

HPC is revolutionizing materials science and engineering by enabling scientists to design and discover new materials with tailored properties at the atomic and molecular level. This involves:

- Ab Initio Calculations: Simulating the quantum mechanical behavior of electrons and atoms to predict material properties from first principles, without empirical data. This is crucial for developing new semiconductors, superconductors, and catalysts.

- Molecular Dynamics Simulations: Tracking the motion of individual atoms and molecules over time to understand material behavior under various conditions (temperature, pressure), leading to insights for drug discovery, polymer design, and nanotechnology.

- Battery Technology: Designing and optimizing new battery chemistries with higher energy density and faster charging capabilities through detailed atomic-level simulations.
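
At its core, a molecular dynamics simulation repeatedly computes forces and advances positions and velocities by a small time step. The sketch below shows one common integrator, velocity Verlet, applied to a single particle on a spring. The one-dimensional setup, the spring force, and the parameter values are assumptions made for brevity. Production codes apply the same update to millions of atoms with far more complex force fields.

```python
import math


def velocity_verlet(x, v, force, mass, dt, steps):
    """Integrate one particle in 1D; `force(x)` returns the force at position x."""
    a = force(x) / mass
    trajectory = [(x, v)]
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt      # advance position
        a_new = force(x) / mass                 # force at the new position
        v = v + 0.5 * (a + a_new) * dt          # advance velocity with averaged acceleration
        a = a_new
        trajectory.append((x, v))
    return trajectory


def total_energy(x, v, mass=1.0, k=1.0):
    """Kinetic plus potential energy of a particle on a spring with stiffness k."""
    return 0.5 * mass * v * v + 0.5 * k * x * x


# Unit mass on a unit spring, released from x = 1 at rest; exact solution is cos(t).
traj = velocity_verlet(x=1.0, v=0.0, force=lambda x: -x, mass=1.0, dt=0.01, steps=1000)
x_end, v_end = traj[-1]
print(f"x(10) = {x_end:.5f}, exact = {math.cos(10.0):.5f}")
print(f"energy drift = {abs(total_energy(x_end, v_end) - 0.5):.2e}")
```

The force evaluation inside the loop is where nearly all the time goes in a real simulation, and it is the part that gets distributed across nodes and accelerators.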

D. Life Sciences and Genomics

The impact of HPC on life sciences and genomics is profound, fundamentally transforming our understanding of biology and disease.

- Genomics and Proteomics: Analyzing vast amounts of genetic sequencing data (e.g., from the Human Genome Project to individual patient genomes) to identify disease markers, understand genetic predispositions, and develop personalized medicine. This includes large-scale sequence alignment and variant calling.

- Drug Discovery and Design: Simulating molecular interactions between potential drug compounds and target proteins to identify promising candidates, optimize their efficacy, and predict side effects, dramatically accelerating the drug development pipeline.

- Protein Folding: Tackling the grand challenge of predicting a protein’s 3D structure from its amino acid sequence, crucial for understanding biological function and designing new therapies. Projects like AlphaFold, leveraging advanced AI on HPC systems, have made significant breakthroughs here.

- Computational Neuroscience: Simulating complex neural networks in the brain to understand cognitive functions, neurological disorders, and develop advanced AI.
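
Sequence alignment, mentioned above, is a good example of a simple kernel that becomes an HPC problem through sheer volume. The sketch below computes a global alignment score with the classic dynamic-programming approach (Needleman-Wunsch). The scoring values are arbitrary illustrative defaults, and production aligners use heuristics and indexing rather than filling a full table for every pair of sequences.

```python
def global_alignment_score(a: str, b: str, match: int = 1,
                           mismatch: int = -1, gap: int = -1) -> int:
    """Best global alignment score of a and b, keeping only one table row in memory."""
    prev = [j * gap for j in range(len(b) + 1)]  # aligning "" against prefixes of b
    for i in range(1, len(a) + 1):
        curr = [i * gap]                         # aligning prefix of a against ""
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = prev[j] + gap                   # gap in b
            left = curr[j - 1] + gap             # gap in a
            curr.append(max(diag, up, left))
        prev = curr
    return prev[-1]


print(global_alignment_score("GATTACA", "GATTACA"))  # 7
print(global_alignment_score("ACGT", "AGT"))         # 2
```

The work grows with the product of the two sequence lengths, and a genomics pipeline performs comparisons like this for an enormous number of reads, which is why the workload is spread across many nodes.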

E. Astrophysics and Cosmology

For astrophysics and cosmology, HPC is the only practical way to simulate the universe’s grandest phenomena.

- Galaxy Formation and Evolution: Simulating the gravitational collapse of matter, star formation, and the merging of galaxies over cosmic timescales to understand how the universe evolved.

- Black Hole Mergers and Gravitational Waves: Modeling the extreme physics of black hole collisions and neutron star mergers, which produce the gravitational waves detected by observatories like LIGO.

- Supernova Explosions: Simulating the violent death of massive stars, providing insights into element synthesis and the origins of cosmic rays.

These simulations demand enormous computational grids and very large numbers of time steps.

F. Financial Modeling and Risk Analysis

Beyond traditional science, HPC is increasingly vital in financial modeling and risk analysis. High-frequency trading, complex derivative pricing, and portfolio optimization all rely on rapidly executing sophisticated algorithms. Banks and financial institutions use HPC for:

- Monte Carlo Simulations: Running millions of scenarios to assess risk for complex financial instruments or entire portfolios.

- Algorithmic Trading: Developing and backtesting high-frequency trading strategies that require near-real-time data processing and decision-making.

- Fraud Detection: Analyzing vast transaction datasets to identify suspicious patterns and prevent financial crime.
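
To make the Monte Carlo idea concrete, the sketch below estimates the price of a European call option by simulating many possible end-of-period asset prices and averaging the discounted payoffs. The price model (geometric Brownian motion) and all parameter values are assumptions chosen for illustration, not a description of any institution's actual risk engine.

```python
import math
import random


def mc_european_call(s0: float, strike: float, rate: float, sigma: float,
                     maturity: float, n_paths: int, seed: int = 0) -> float:
    """Monte Carlo price of a European call under geometric Brownian motion."""
    rng = random.Random(seed)                      # fixed seed for reproducibility
    drift = (rate - 0.5 * sigma ** 2) * maturity
    vol = sigma * math.sqrt(maturity)
    total_payoff = 0.0
    for _ in range(n_paths):
        # One scenario: asset price at maturity.
        s_t = s0 * math.exp(drift + vol * rng.gauss(0.0, 1.0))
        total_payoff += max(s_t - strike, 0.0)
    # Average payoff, discounted back to today.
    return math.exp(-rate * maturity) * total_payoff / n_paths


estimate = mc_european_call(s0=100.0, strike=100.0, rate=0.05, sigma=0.2,
                            maturity=1.0, n_paths=100_000)
print(f"estimated call price: {estimate:.2f}")
```

Each scenario is independent of the others, so the loop can be divided among as many processors as are available. The statistical error shrinks only with the square root of the number of scenarios, which is why tight error bars require so many of them.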

G. Artificial Intelligence and Machine Learning

The explosion of Artificial Intelligence (AI) and Machine Learning (ML), particularly deep learning, is inextricably linked to HPC. Training large-scale neural networks for tasks like image recognition, natural language processing, and autonomous driving requires enormous computational power. GPUs, with their highly parallel architectures, are the workhorses of AI training. HPC provides the necessary infrastructure for:

- Training Large Language Models (LLMs): Developing models like GPT-3/4, which require hundreds or thousands of GPUs running for weeks or months.

- Computer Vision: Training models for object detection, facial recognition, and medical image analysis.

- Drug Discovery with AI: Combining AI with molecular simulations to accelerate the search for new drugs.

- Scientific AI: Using AI to discover new physics, chemistry, or materials by learning from simulation data or experimental results.
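
Large training runs are typically spread over many GPUs using data parallelism: each device computes gradients on its own shard of the batch, the gradients are averaged across devices, and every device applies the same update. The sketch below simulates that scheme serially for a one-parameter model. The model, the data, and the function names are invented for this example, and the averaging step stands in for the all-reduce that a real framework would perform over the interconnect.

```python
def gradient(w: float, xs, ys) -> float:
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_gradient(w: float, xs, ys, num_workers: int) -> float:
    """Average of per-shard gradients; assumes len(xs) is divisible by num_workers."""
    shard = len(xs) // num_workers
    local = [gradient(w, xs[r * shard:(r + 1) * shard], ys[r * shard:(r + 1) * shard])
             for r in range(num_workers)]      # each worker uses only its own shard
    return sum(local) / num_workers            # stand-in for an all-reduce average


def train(xs, ys, num_workers: int, lr: float = 0.01, steps: int = 50) -> float:
    w = 0.0
    for _ in range(steps):
        w -= lr * data_parallel_gradient(w, xs, ys, num_workers)
    return w


xs = [float(i) for i in range(1, 9)]
ys = [3.0 * x for x in xs]                     # data generated with true w = 3
print(round(train(xs, ys, num_workers=4), 6))  # 3.0
```

With equal-sized shards the averaged gradient matches the full-batch gradient, so adding workers changes how fast each step runs, not what the step computes. The price is one collective communication per step, which is where the interconnect described earlier becomes critical.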

The Challenges and Future Directions of HPC

Despite its remarkable successes, HPC faces persistent challenges that drive continuous innovation. Overcoming these hurdles is crucial for reaching the next frontiers of computational power and scientific discovery.

A. Power Consumption and Energy Efficiency

One of the most pressing challenges for supercomputers is power consumption and energy efficiency. As systems scale to exascale and beyond, the electricity required to power and cool them becomes astronomical, incurring massive operational costs and raising environmental concerns. Researchers are exploring:

- More Energy-Efficient Architectures: Designing processors and accelerators (like those using custom ASICs or neuromorphic computing) that perform more computations per watt.

- Advanced Cooling Technologies: Implementing direct liquid cooling, immersion cooling, and even cryogenic cooling to improve heat dissipation efficiency.

- Software Optimization: Developing more energy-aware algorithms and programming models that minimize power draw while maintaining performance.

Across all of these approaches, the goal is to maximize performance per watt.
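
The efficiency metric and the operating cost behind it are both simple arithmetic, sketched below. The sustained performance, power draw, and electricity price are hypothetical round numbers used only to show the calculation, not figures for any real installation.

```python
def gflops_per_watt(sustained_pflops: float, power_mw: float) -> float:
    """Energy efficiency in GFLOP/s per watt.

    1 PFLOP/s = 1e6 GFLOP/s and 1 MW = 1e6 W, so the unit factors cancel.
    """
    return sustained_pflops / power_mw


def annual_energy_cost(power_mw: float, price_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Electricity cost per year at a constant average draw (price in currency per kWh)."""
    hours_per_year = 365 * 24
    return power_mw * 1000.0 * hours_per_year * utilization * price_per_kwh


# Hypothetical system: 1,000 PFLOP/s sustained at 20 MW, electricity at 0.10 per kWh.
print(gflops_per_watt(1000.0, 20.0))                 # 50.0
print(f"{annual_energy_cost(20.0, 0.10):,.0f} per year")
```

At these assumed values the machine delivers 50 GFLOP/s per watt and costs on the order of 17.5 million per year to power, which is why even modest efficiency gains matter at this scale.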

B. Data Movement and Storage Bottlenecks

The sheer volume of data generated and processed by HPC applications creates significant data movement and storage bottlenecks. Moving data between compute nodes, memory layers, and persistent storage can become the dominant factor limiting application performance, even more so than the computational speed of processors. Future HPC systems will need:

- Closer Memory-Processor Integration: Technologies like High-Bandwidth Memory (HBM) and Compute Express Link (CXL) aim to bring memory closer to the processor, reducing latency and increasing bandwidth.

- Intelligent Data Tiering: Automated systems that move data efficiently between ultra-fast burst buffers, parallel file systems, and archival storage based on access patterns.

- In-Situ Processing: Performing analysis or reduction of data as it is generated, minimizing the need to write massive amounts of raw data to storage.
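
In-situ processing can be as simple as keeping running statistics instead of storing every value. The sketch below uses Welford's online algorithm to track mean, variance, minimum, and maximum of a stream in constant memory. The `fake_simulation` generator is a made-up stand-in for a real solver's per-step output.

```python
import math
import random


class RunningStats:
    """Welford's online algorithm: one pass over the data, constant memory."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0                 # running sum of squared deviations from the mean
        self.minimum = math.inf
        self.maximum = -math.inf

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)
        self.minimum = min(self.minimum, x)
        self.maximum = max(self.maximum, x)

    @property
    def variance(self) -> float:
        """Population variance of the values seen so far."""
        return self._m2 / self.n if self.n else 0.0


def fake_simulation(steps: int, seed: int = 0):
    """Hypothetical solver output: one value per time step."""
    rng = random.Random(seed)
    for _ in range(steps):
        yield rng.gauss(300.0, 5.0)


stats = RunningStats()
for value in fake_simulation(100_000):
    stats.update(value)                # reduce as the data is produced; nothing is stored
print(stats.n, round(stats.mean, 2), round(stats.variance, 2))
```

Only a handful of numbers ever need to reach storage, regardless of how many time steps the simulation runs. The trade-off is that the analysis must be chosen up front, because the raw values are gone once the run ends.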

C. Programming Model Complexity and Portability

Programming HPC systems remains a highly specialized skill due to the complexity of parallel programming models and heterogeneous architectures. Developers need to manage parallelism across multiple CPUs, GPUs, and potentially other accelerators, often using different programming paradigms. This leads to challenges in:

- Code Portability: Writing code that performs optimally across different HPC architectures (e.g., NVIDIA GPUs vs. AMD GPUs vs. Intel CPUs) without extensive re-writing.

- Ease of Use: Simplifying the development process for scientists and engineers who are experts in their domain but not necessarily in parallel programming. Efforts like SYCL, OpenACC, and unified memory models aim to abstract some of this complexity.

- Debugging and Performance Tuning: Identifying performance bottlenecks and debugging errors in highly parallel, distributed systems is notoriously difficult.

D. Artificial Intelligence Integration

The symbiotic relationship between HPC and AI is rapidly deepening. Future HPC systems will be designed from the ground up to accelerate AI workloads, while AI itself will increasingly be used to optimize HPC operations. This includes:

- AI for HPC System Management: Using machine learning to predict hardware failures, optimize job scheduling, and manage cooling systems more efficiently.

- AI-Driven Scientific Discovery: Employing AI to analyze massive simulation outputs, guide adaptive simulations (e.g., dynamically changing resolution where interesting phenomena occur), and even discover new scientific laws from data.

- Converged AI/HPC Workflows: Tightly integrating AI training and inference into traditional scientific simulation pipelines, allowing for real-time model steering or intelligent data reduction.

E. Quantum Computing’s Influence

While still in its nascent stages, quantum computing represents a potential long-term disruptor to classical HPC for certain classes of problems. Although not a replacement for classical supercomputers, quantum computers might offer large, in some cases exponential, speedups for specific tasks such as molecular simulation for drug discovery and materials science, and possibly for some optimization problems that are intractable for even the most powerful classical HPC systems. The future might see hybrid quantum-classical HPC systems where classical supercomputers handle the bulk of computation, offloading specific, intractable sub-problems to quantum accelerators.

Conclusion

High Performance Computing is far more than just powerful hardware; it is an indispensable intellectual tool that magnifies human intellect, enabling us to ask and answer questions of unprecedented scale and complexity. It serves as the vital bridge between theoretical science and practical application, accelerating the pace of discovery across virtually every scientific and engineering discipline. From unraveling the mysteries of the cosmos and predicting the intricate dynamics of Earth’s climate to designing life-saving drugs and forging new materials at the atomic level, HPC is continually pushing the boundaries of what is computationally possible.

The ongoing challenges of power consumption, data movement, and programming complexity are not roadblocks but rather catalysts for innovation, driving the development of even more efficient architectures, smarter software, and integrated AI capabilities. As the exascale era gets under way and we look towards zettascale and beyond, HPC will remain at the forefront, not just accelerating computations, but fundamentally transforming the very methodology of scientific research. It is the powerhouse that translates raw data into profound insights, turns complex models into predictive power, and ultimately transforms abstract scientific questions into tangible breakthroughs that shape our understanding of the universe and improve the quality of human life. The horizon of discovery, powered by HPC, continues to unfold with boundless potential.