Deploying, managing, and scaling applications efficiently matters to businesses of every size. Containerization changed how software is packaged and run, giving teams consistent, portable units of deployment. However, managing many containers across several environments quickly becomes complex. This is where container orchestration steps in, turning a sprawl of individual containers into a streamlined, automated, and efficient system.

The Rise of Containerization: A Foundation for Orchestration

Before diving into orchestration, it’s worth understanding the shift that containerization brought about. Traditional deployment often involved installing software directly onto physical or virtual machines. That approach was plagued by dependency conflicts, the “it works on my machine” problem, and the difficulty of replicating environments consistently.

Containers, such as those powered by Docker, encapsulate an application and all its dependencies (libraries, frameworks, and configuration files) into a single, lightweight, isolated unit. Because everything the application needs travels with it, the application runs consistently across different underlying infrastructure.

A. Portability: Containers can run on a developer’s laptop, an on-premises server, or in the cloud without modification, as long as the host provides a compatible operating system kernel and CPU architecture. This “build once, run anywhere” approach greatly simplifies development and deployment pipelines.

B. Consistency: Because containers package everything an application needs to run, they eliminate the environmental discrepancies that often lead to bugs and deployment failures.

C. Isolation: Each container runs in its own isolated environment, preventing conflicts between different applications or services on the same host. This improves stability and adds a layer of security, although containers share the host kernel and are therefore less strongly isolated than virtual machines.

D. Resource Efficiency: Containers share the host operating system’s kernel, which makes them much lighter than traditional virtual machines, each of which requires its own OS instance. This allows a higher density of applications on a single server and better resource utilization.

E. Faster Startup Times: Unlike virtual machines, which must boot a full operating system, containers typically start in seconds or less, leading to quicker development cycles and more responsive applications.

While containers provide these immense benefits, managing even a modest number of them manually becomes a daunting task. Imagine a microservices architecture with dozens or hundreds of independent services, each potentially requiring multiple instances for high availability and scalability. This is precisely the challenge that container orchestration addresses.

What Exactly is Container Orchestration?

At its core, container orchestration is the automated management, deployment, scaling, and networking of containers. It transforms individual, isolated containers into a cohesive, highly available, and resilient application ecosystem. Think of it as the conductor of an orchestra, ensuring that each instrument (container) plays its part at the right time, in harmony with the others, to produce a beautiful symphony (a functioning application).

An orchestration platform automates critical operational tasks, including:

A. Deployment and Scheduling: It places containers onto available hosts based on resource requirements, constraints, and the desired state, making efficient use of your infrastructure.

B. Service Discovery: As containers are started and stopped, the orchestration system registers and deregisters them automatically, so services can find and communicate with each other without hard-coded addresses.

C. Load Balancing: It distributes incoming traffic across multiple instances of a service, preventing bottlenecks and improving performance and availability.

D. Scaling Up and Down: Based on predefined rules or real-time metrics (e.g., CPU utilization, memory usage), the orchestrator can add instances of a container (scale up) or remove unneeded ones (scale down) to match demand without wasting resources.

E. Self-Healing and High Availability: If a container or an entire host fails, the orchestration system detects the failure and replaces the affected containers on healthy nodes, keeping the service available. This is essential for mission-critical applications.

F. Configuration Management: It manages environment variables, secrets (passwords, API keys), and other configuration data, and injects them into containers at runtime. This centralizes configuration and reduces errors.

G. Rolling Updates and Rollbacks: Orchestration platforms can update applications with little or no downtime by gradually replacing old container versions with new ones while monitoring the health of the new instances. If issues arise, the update can be rolled back to the previous stable version, automatically on some platforms and with a single command on others.

H. Resource Monitoring and Logging: Although not always built in, orchestration platforms commonly integrate with monitoring and logging solutions that provide insight into container performance and health, which is crucial for troubleshooting and performance optimization.
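To make service discovery and load balancing concrete, here is a minimal Python sketch of a registry that tracks the live instances of each service and hands them out round-robin. The `ServiceRegistry` class and its methods are hypothetical and are not the API of any particular orchestrator; real platforms usually expose this through DNS names, virtual IPs, or proxies, and rely on health checks to decide when an instance is registered or removed.

```python
class ServiceRegistry:
    """Toy registry mapping each service name to the addresses of its live instances."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = {}
        self._cursor: dict[str, int] = {}  # per-service round-robin position

    def register(self, service: str, address: str) -> None:
        # Called when an instance starts and is ready to receive traffic.
        addresses = self._instances.setdefault(service, [])
        if address not in addresses:
            addresses.append(address)

    def deregister(self, service: str, address: str) -> None:
        # Called when an instance stops, fails, or is replaced.
        addresses = self._instances.get(service, [])
        if address in addresses:
            addresses.remove(address)

    def next_instance(self, service: str) -> str:
        # Round-robin load balancing: hand out the live instances in turn.
        addresses = self._instances.get(service)
        if not addresses:
            raise LookupError(f"no live instances of {service!r}")
        i = self._cursor.get(service, 0) % len(addresses)
        self._cursor[service] = i + 1
        return addresses[i]
```

With two instances of `web` registered, successive `next_instance("web")` calls alternate between them; once one is deregistered, every call returns the survivor, and callers never need to know which addresses exist.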

Key Players in the Container Orchestration Landscape

Several powerful tools dominate the container orchestration space, each with its unique strengths and use cases. The most prominent among them are Kubernetes, Docker Swarm, and Apache Mesos.

A. Kubernetes: The most widely adopted container orchestrator, Kubernetes (often abbreviated as K8s) is an open-source system originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). Its popularity stems from its robust feature set, extensibility, and a vibrant community.

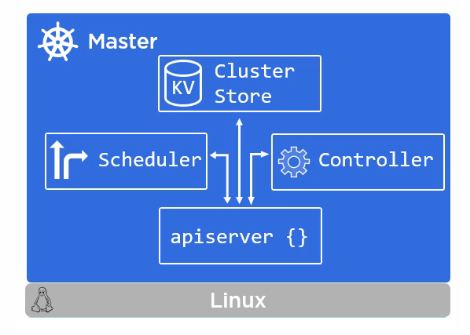

I. Architecture: Kubernetes uses a control plane and worker node architecture. The control plane (historically called the master) manages the cluster, making scheduling decisions and maintaining the desired state. Worker nodes (once called minions) run the actual containerized applications.

II. Core Concepts:
1. Pods: The smallest deployable unit in Kubernetes. A Pod is a group of one or more containers, plus resources they share, that are always scheduled together on the same node.
2. Deployments: An abstraction that manages a set of identical Pods. Deployments enable declarative updates, rolling out new versions, and rolling back to previous ones.
3. Services: Provide a stable network endpoint for a set of Pods. Other applications can reach your containers through a Service without knowing individual Pod IP addresses, which change as Pods are replaced.
4. Ingress: Manages external access to services within the cluster, typically providing HTTP and HTTPS routing.
5. ConfigMaps and Secrets: Inject configuration data (ConfigMaps) and sensitive information such as passwords (Secrets) into containers.
6. Volumes: Provide storage to the containers in a Pod so data survives container restarts; PersistentVolumes keep data beyond the lifetime of any single Pod, even when it is rescheduled to another node.

III. Strengths: Rich features for complex deployments, high extensibility, massive community support, and wide adoption across enterprises and cloud providers.

IV. Weaknesses: A steep learning curve due to its complexity and rich feature set; resource-intensive for very small deployments.
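Kubernetes Services choose their Pods through label selectors. The sketch below models only the simplest, equality-based form: a Pod becomes an endpoint if it carries every key/value pair in the selector and is Ready. The `Pod` dataclass and the `matches` and `endpoints` helpers are illustrative assumptions, not Kubernetes client code; in a real cluster the control plane keeps the endpoint list up to date as Pods come and go.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True  # stands in for a passing readiness probe


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    # Equality-based selection: every key/value in the selector must appear on the Pod.
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    # Traffic is routed only to Pods that match the selector and are Ready.
    return [pod.ip for pod in pods if pod.ready and matches(selector, pod.labels)]
```

Because the Service is defined by a selector rather than a list of IPs, replacing a Pod only changes which addresses `endpoints` returns; clients keep using the same Service name.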

B. Docker Swarm: Docker Swarm is Docker’s native container orchestration solution. It’s simpler to set up and use than Kubernetes, making it an attractive option for users already familiar with the Docker ecosystem or for those with less complex orchestration needs.

I. Integration with Docker: Swarm mode is built directly into Docker Engine, so you can enable it with a single command (docker swarm init). This makes it very easy to get started.

II. Simplicity: Swarm aims for ease of use. Its commands closely resemble standard Docker commands, which lowers the learning curve.

III. Key Concepts:
1. Nodes: Machines participating in the Swarm, categorized as managers (for orchestration) and workers (for running containers).
2. Services: Define how containers should run, including the image to use, the number of replicas, and network settings.
3. Tasks: The individual units of work a service schedules onto nodes; each task runs one container.

IV. Strengths: Excellent for simple to moderate deployments, a low barrier to entry for Docker users, and straightforward setup and management.

V. Weaknesses: Fewer features than Kubernetes, less widely adopted for very large or complex enterprise environments, and a smaller ecosystem.
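A Swarm service created with, for example, `--replicas 3` is turned into three tasks that the managers assign to nodes. The toy scheduler below mimics a simple spread strategy, placing each new task on the node currently running the fewest tasks, and names tasks in the `service.slot` style that `docker service ps` displays. The `place_tasks` function is hypothetical; the real Swarm scheduler also weighs resource reservations, placement constraints, and node availability.

```python
from collections import Counter
from typing import Optional


def place_tasks(service: str, replicas: int, nodes: list[str],
                existing: Optional[dict[str, int]] = None) -> list[tuple[str, str]]:
    """Create one task per replica slot and assign each to the least-busy node.

    `existing` optionally counts tasks already running on each node.
    Returns (task name, node) pairs.
    """
    if not nodes:
        raise ValueError("no available nodes")
    load = Counter({node: 0 for node in nodes})
    if existing:
        load.update(existing)  # add tasks already running elsewhere in the swarm
    tasks = []
    for slot in range(1, replicas + 1):
        # Spread: pick the node with the fewest tasks; ties go to the earlier node in `nodes`.
        node = min(nodes, key=lambda n: load[n])
        load[node] += 1
        tasks.append((f"{service}.{slot}", node))
    return tasks
```

Spreading tasks this way means losing one node takes down as few replicas of a service as possible, which is why it is a sensible default for stateless services.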

C. Apache Mesos: Apache Mesos is less focused on containers than the other two tools. It is a distributed systems kernel: a general-purpose resource management platform that can run many kinds of distributed applications, containerized workloads among them.

I. Resource Abstraction: Mesos abstracts CPU, memory, storage, and other compute resources away from individual machines, allowing distributed applications to consume these resources in a fine-grained manner.

II. Frameworks: Mesos acts as a kernel and relies on “frameworks” (e.g., Marathon for long-running services, Chronos for cron jobs) to schedule tasks. Mesos offers available resources to each framework, and the framework decides which offers to accept and what to run on them.

III. Strengths: Highly scalable, capable of managing a wide variety of workloads beyond containers, and robust for very large clusters.

IV. Weaknesses: More complex to set up and manage than Kubernetes or Docker Swarm, less focused on container orchestration, and a steeper learning curve.
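The split between the Mesos kernel and its frameworks is often called two-level scheduling: the Mesos master offers spare resources on agents to a framework, and the framework decides which offers to accept and which tasks to launch. The Python sketch below shows only the framework’s half of that exchange, using a naive first-fit policy. `Offer`, `TaskSpec`, and `handle_offers` are illustrative names, not the Mesos scheduler API.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent: str  # machine whose spare resources are being offered
    cpus: float
    mem_mb: int


@dataclass
class TaskSpec:
    name: str
    cpus: float
    mem_mb: int


def handle_offers(offers: list[Offer], pending: list[TaskSpec]):
    """Framework side of two-level scheduling.

    Packs pending tasks into each offer's resources, first come first served.
    Returns (launched, declined, still_pending): launched holds (task, agent) pairs,
    declined lists agents whose offers went unused.
    """
    launched: list[tuple[str, str]] = []
    declined: list[str] = []
    queue = list(pending)
    for offer in offers:
        cpus, mem = offer.cpus, offer.mem_mb
        used = False
        for task in list(queue):  # iterate over a copy so tasks can be removed from queue
            if task.cpus <= cpus and task.mem_mb <= mem:
                cpus -= task.cpus
                mem -= task.mem_mb
                launched.append((task.name, offer.agent))
                queue.remove(task)
                used = True
        if not used:
            declined.append(offer.agent)  # unused resources go back for other frameworks
    return launched, declined, queue
```

Because each framework applies its own policy to the offers it receives, Marathon and Chronos can share one cluster while scheduling very different kinds of work.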

The Benefits of Adopting Container Orchestration

Implementing a container orchestration strategy offers a multitude of advantages that directly impact operational efficiency, reliability, and development velocity.

A. Enhanced Scalability: Orchestration platforms let you scale applications up or down with demand, either with a single command or automatically through autoscaling rules. With autoscaling in place, you can absorb traffic spikes without manual intervention and reduce infrastructure costs during periods of low usage. For e-commerce sites, this is invaluable during holiday sales; for media streaming, during peak viewing hours.
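Autoscaling rules are often proportional. The Kubernetes Horizontal Pod Autoscaler documentation describes its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The Python function below is a simplified sketch of that rule with assumed minimum and maximum bounds; it ignores the stabilization windows and per-Pod metric handling the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes Horizontal Pod Autoscaler."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target yield 6 replicas, while 63% falls inside the tolerance and leaves the count at 4.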

B. Improved Reliability and High Availability: By automatically replacing failed containers or entire nodes, orchestrators ensure that your applications remain available even in the face of hardware failures or software crashes. This self-healing capability is critical for maintaining customer satisfaction and preventing costly downtime.
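Self-healing usually comes from a control loop that repeatedly compares the observed state with the desired state and acts on the difference. The sketch below runs one pass of such a loop over a toy in-memory `Cluster`; the class, the `reconcile` function, and the least-loaded placement rule are assumptions for illustration, and a node failure is simulated by removing the node from `healthy_nodes`.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    healthy_nodes: set[str]
    containers: dict[str, str] = field(default_factory=dict)  # container id -> node name
    next_id: int = 1  # counter used to name replacement containers


def reconcile(cluster: Cluster, desired_replicas: int) -> list[str]:
    """One pass of a desired-state control loop; returns the actions it took."""
    actions: list[str] = []
    # 1. Observe: containers on nodes that are no longer healthy are treated as lost.
    for cid, node in list(cluster.containers.items()):
        if node not in cluster.healthy_nodes:
            del cluster.containers[cid]
            actions.append(f"remove {cid}: {node} is down")
    # 2. Too few replicas: start replacements on the least-loaded healthy node.
    while len(cluster.containers) < desired_replicas:
        if not cluster.healthy_nodes:
            raise RuntimeError("no healthy nodes available")
        load = {node: 0 for node in cluster.healthy_nodes}
        for node in cluster.containers.values():
            load[node] += 1
        target = min(sorted(load), key=load.get)  # sorted() makes ties deterministic
        cid = f"c{cluster.next_id}"
        cluster.next_id += 1
        cluster.containers[cid] = target
        actions.append(f"start {cid} on {target}")
    # 3. Too many replicas: stop the most recently started containers.
    while len(cluster.containers) > desired_replicas:
        cid = next(reversed(cluster.containers))
        del cluster.containers[cid]
        actions.append(f"stop {cid}: scale down")
    return actions
```

Running the loop again when nothing has changed returns no actions; that idempotence is what makes it safe for an orchestrator to reconcile continuously.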

C. Faster Deployment Cycles (CI/CD Integration): Container orchestration seamlessly integrates with Continuous Integration/Continuous Delivery (CI/CD) pipelines. Once a new version of an application is built and containerized, the orchestrator can automatically deploy it with rolling updates, minimizing or eliminating downtime. This speeds up release cycles and allows for more frequent feature delivery.
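A rolling update can be pictured as a sequence of steps that trade old instances for new ones within two limits. Kubernetes Deployments call these limits `maxSurge` (how many Pods may exist above the desired count) and `maxUnavailable` (how many may be missing), both defaulting to 25%. The hypothetical `rolling_update_plan` function below sketches the resulting sequence under a strong simplifying assumption: every new instance is ready immediately. A real controller waits for readiness probes and does not advance while new Pods are unhealthy.

```python
def rolling_update_plan(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return the (old, new) instance counts after each step of a rolling update.

    Assumes new instances become ready instantly (a simplification).
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be zero")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Scale up the new version without exceeding replicas + max_surge in total.
        new = min(replicas, new + max(0, replicas + max_surge - (old + new)))
        # Scale down the old version while keeping at least replicas - max_unavailable running.
        removable = max(0, (old + new) - (replicas - max_unavailable))
        old = max(0, old - removable)
        steps.append((old, new))
    return steps
```

With 4 replicas and both limits set to 1, the plan is `[(2, 1), (0, 3), (0, 4)]`: at least 3 instances serve traffic at every step and no more than 5 ever run at once.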

D. Optimized Resource Utilization: Orchestrators efficiently pack containers onto available infrastructure, maximizing the use of your compute, memory, and storage resources. This reduces wasted capacity and lowers operational costs, a significant benefit for cloud-based deployments where you pay for what you use.
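Packing containers tightly is a bin-packing problem, which is NP-hard in general, so schedulers rely on heuristics. As an illustration only, here is the first-fit decreasing heuristic in Python, packing CPU requests expressed in millicores onto identical nodes. The function name, the single-resource model, and the node size are assumptions; the Kubernetes scheduler, for instance, uses configurable filtering and scoring plugins rather than this exact algorithm.

```python
def first_fit_decreasing(requests: dict[str, int], node_capacity: int) -> list[dict[str, int]]:
    """Assign each container's CPU request (millicores) to a node, opening as few nodes as the heuristic can."""
    nodes: list[dict[str, int]] = []  # containers placed on each node
    free: list[int] = []  # remaining capacity of each node
    # Largest requests first: they are the hardest to fit once nodes fill up.
    for name, req in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        if req > node_capacity:
            raise ValueError(f"{name} requests more than one node can offer")
        for i, remaining in enumerate(free):
            if req <= remaining:  # the first node with room wins
                nodes[i][name] = req
                free[i] -= req
                break
        else:
            # No existing node has room, so open a new one.
            nodes.append({name: req})
            free.append(node_capacity - req)
    return nodes
```

The five example requests in the test below total 5,750 millicores, so two 4,000-millicore nodes are the minimum, and the heuristic reaches it; running one container per node would need five.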

E. Simplified Management of Complex Applications: For applications built on a microservices architecture, managing hundreds or thousands of interconnected containers can be overwhelming. Orchestration provides a centralized control plane, simplifying tasks like networking, service discovery, and configuration management across all services.

F. Standardization Across Environments: Containers ensure consistency, and orchestration extends this consistency to how applications are deployed and managed across development, testing, staging, and production environments. This reduces “it works on my machine” issues and streamlines the entire software lifecycle.

G. Disaster Recovery and Business Continuity: With orchestration, you declare your application’s desired state in configuration. After a catastrophic failure, you can redeploy the entire application stack to a new region or cluster from those definitions, significantly improving your disaster recovery capabilities. Persistent data still needs its own backup and replication strategy.

H. Enhanced Security Posture: Orchestration platforms offer features like secret management, network policies, and role-based access control (RBAC), which help secure your containerized applications and infrastructure. Centralized configuration of security policies ensures consistency across your environment.
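At its core, an RBAC check asks whether any role bound to a subject permits a given verb on a given resource. The sketch below follows the additive model that Kubernetes RBAC documents (there are no deny rules, so anything not explicitly granted is refused). The role names, bindings, and `is_allowed` helper are hypothetical; real Kubernetes rules also match API groups, namespaces, specific resource names, and wildcards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    resources: frozenset[str]
    verbs: frozenset[str]


# Hypothetical roles and bindings, loosely shaped like Kubernetes Role/RoleBinding objects.
ROLES: dict[str, list[Rule]] = {
    "pod-reader": [Rule(frozenset({"pods"}), frozenset({"get", "list", "watch"}))],
    "deployer": [Rule(frozenset({"deployments"}), frozenset({"get", "create", "update", "patch"}))],
}
BINDINGS: dict[str, list[str]] = {
    "alice": ["pod-reader"],
    "ci-bot": ["pod-reader", "deployer"],
}


def is_allowed(subject: str, verb: str, resource: str) -> bool:
    """Grant access if any role bound to the subject has a matching rule; deny everything else."""
    for role in BINDINGS.get(subject, []):
        for rule in ROLES.get(role, []):
            if resource in rule.resources and verb in rule.verbs:
                return True
    return False
```

Here `ci-bot` may `patch` deployments, but no subject may `delete` pods because no role grants that verb; unknown subjects get nothing at all.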

Implementing Container Orchestration: A Strategic Approach

Adopting container orchestration is a significant undertaking that requires careful planning and execution. Here’s a strategic approach to guide your implementation:

A. Assess Your Needs:
I. Application Complexity: Are you running a few monolithic applications, or are you embracing a microservices architecture with many interconnected services?
II. Scale: How many containers do you anticipate running? What are your expected traffic patterns and growth?
III. Team Expertise: How familiar is your team with Docker, Kubernetes, or other orchestration tools?
IV. Infrastructure: Are you deploying on-premises, in the cloud, or in a hybrid environment?

B. Choose the Right Orchestrator:
I. For Small to Medium Deployments / Docker Users: Docker Swarm offers simplicity and ease of use.
II. For Complex, Large-Scale, or Future-Proof Deployments: Kubernetes is the industry standard, offering great flexibility and a vast ecosystem. Be prepared for a steeper learning curve.
III. For Generalized Resource Management: Apache Mesos, if your needs extend beyond containers to other types of distributed workloads.

C. Design Your Architecture:
I. Microservices vs. Monoliths: Orchestration benefits both, but it truly shines with microservices, where it simplifies managing many independent services.
II. Networking: Plan your container networking strategy carefully, considering both internal service-to-service communication and external access.
III. Storage: Determine how you will handle persistent data for stateful applications. Kubernetes, for example, provides PersistentVolumes, PersistentVolumeClaims, and StorageClasses for this purpose.

D. Develop a Containerization Strategy:
I. Containerize Applications: Ensure your applications are properly containerized, with efficient and secure Docker images.
II. Registry: Set up a container registry (e.g., Docker Hub, Google Artifact Registry, Amazon ECR) to store and manage your images.

E. Establish CI/CD Pipelines:
I. Automate Builds: Integrate container image building into your CI pipeline.
II. Automate Deployments: Configure your CD pipeline to deploy new container images to your orchestration cluster automatically. This is where rolling updates and rollbacks become invaluable.

F. Implement Monitoring and Logging:
I. Centralized Logging: Aggregate logs from all your containers and orchestrator components into a central logging system (e.g., ELK stack, Splunk, Datadog).
II. Performance Monitoring: Monitor key metrics such as CPU, memory, network I/O, and application-specific metrics to maintain performance and identify issues quickly.
III. Alerting: Set up alerts for critical thresholds or anomalies so you can address problems proactively.
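Alerting on a single sample tends to page people for momentary spikes. A common remedy, similar in spirit to the `for` clause in Prometheus alerting rules, is to fire only when a condition has held continuously for some duration. The function below is a hypothetical sketch of that idea over (timestamp in seconds, value) samples; it is not how any specific monitoring system evaluates its rules.

```python
def alert_firing_times(samples: list[tuple[int, float]], threshold: float, for_seconds: int) -> list[int]:
    """Return the timestamps at which an alert starts firing.

    The alert fires once the value has stayed above `threshold` for at least `for_seconds`,
    so brief spikes are ignored; it resets when the value drops back to or below the threshold.
    """
    fired = []
    breach_start = None  # timestamp at which the current breach began, if any
    firing = False
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts
            if not firing and ts - breach_start >= for_seconds:
                firing = True
                fired.append(ts)
        else:
            breach_start = None
            firing = False
    return fired
```

With CPU samples every 30 seconds, a threshold of 90%, and a 60-second hold, the alert in the test below fires once at t=90 and ignores the shorter breach that starts at t=150.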

G. Focus on Security:
I. Image Security: Scan container images for vulnerabilities before deployment.
II. Network Policies: Implement strict network policies to control which containers can communicate with each other.
III. Secret Management: Use the orchestrator’s secret management capabilities (e.g., Kubernetes Secrets) to handle sensitive data, and enable encryption at rest where available, since Kubernetes stores Secrets unencrypted by default.
IV. RBAC: Implement Role-Based Access Control so users and services have only the permissions they need.
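Network policies are typically additive allow-lists. In Kubernetes, a Pod accepts all ingress traffic until some NetworkPolicy selects it; from then on, only traffic that at least one selecting policy allows gets through, and a policy with an empty Pod selector can act as a namespace-wide default deny. The simplified model below captures just that logic with label dictionaries. `Policy` and `ingress_allowed` are hypothetical names, and namespaces, ports, and egress rules are left out; enforcement in a real cluster also requires a network plugin that supports NetworkPolicy.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    target: dict[str, str]  # labels of the Pods this policy protects ({} selects every Pod)
    allow_from: list[dict[str, str]]  # label selectors of Pods allowed to connect in


def _matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(src: dict[str, str], dst: dict[str, str], policies: list[Policy]) -> bool:
    """Decide whether a Pod labelled `src` may open a connection to a Pod labelled `dst`."""
    selecting = [p for p in policies if _matches(p.target, dst)]
    if not selecting:
        # No policy selects the destination, so it is not isolated: all ingress is allowed.
        return True
    # Once isolated, traffic must be allowed by at least one selecting policy (rules are additive).
    return any(_matches(rule, src) for p in selecting for rule in p.allow_from)
```

Adding a catch-all policy with no allowed sources flips every unprotected Pod to deny-by-default, while traffic explicitly allowed by other policies keeps flowing.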

H. Train Your Team:
I. Upskill Developers: Teach developers how to write container-native applications.
II. Educate Operations: Train your operations team to manage and troubleshoot the chosen orchestration platform.
III. Foster a DevOps Culture: Encourage collaboration between development and operations teams to fully leverage the benefits of container orchestration.

The Future of Container Orchestration

The landscape of container orchestration is continuously evolving. We can expect several key trends to shape its future:

A. Further Abstraction and Managed Services: Cloud providers will continue to offer highly abstracted, managed Kubernetes services (e.g., GKE, EKS, AKS), reducing the operational burden on users and letting them focus on application development.

B. Edge Computing Integration: As compute moves closer to data sources, container orchestration will play a crucial role in managing containerized applications deployed at the edge, supporting IoT and real-time processing.

C. Serverless Integration: The lines between container orchestration and serverless computing will continue to blur. Platforms like Knative on Kubernetes enable serverless-like experiences on top of container infrastructure.

D. AI/ML Workload Management: Orchestration platforms will become better optimized for computationally intensive AI/ML workloads, providing specialized scheduling and resource allocation.

E. Enhanced Security and Compliance: As containerized environments become more widespread, there will be a growing focus on advanced security features, supply chain security, and compliance frameworks.

F. WebAssembly (Wasm) as a Container Alternative: Although still nascent, WebAssembly is emerging as a potential alternative or complement to Docker containers for certain use cases, particularly where very lightweight, secure, and portable execution environments are needed. Orchestration tools may need to adapt to manage Wasm modules alongside traditional containers.

Conclusion

Container orchestration is no longer just an industry buzzword; it is an indispensable technology for modern software development and deployment. By automating the complex tasks of managing, scaling, and maintaining containerized applications, businesses can achieve much higher levels of efficiency, reliability, and agility. Whether you choose Kubernetes for its vast capabilities or Docker Swarm for its simplicity, embracing orchestration is a strategic decision that will empower your teams, optimize your infrastructure, and drive your business forward in the cloud-native era. The initial investment in learning and implementation pays dividends through faster time-to-market, reduced operational overhead, and a more robust and resilient application landscape.