Artificial Intelligence (AI) workloads demand ever more processing power and data, and that demand is reshaping the technology landscape. From training complex machine learning models to real-time inference, they are pushing the boundaries of traditional computing infrastructure. This surge calls for a shift in how we design, deploy, and manage data centers and cloud environments. Understanding these escalating requirements is crucial for businesses aiming to harness the full potential of AI.

The journey of an AI workload, from initial data ingestion to final model deployment, is a computationally intensive one. It begins with vast datasets, often measured in terabytes or even petabytes, which need to be stored, accessed, and processed with extreme efficiency. This initial phase, often called data preparation, involves cleaning, transforming, and augmenting raw data, a task that can be incredibly resource-heavy. Following this, the training phase commences, where AI models learn from this data. This is arguably the most demanding stage, requiring immense parallel processing capabilities, typically provided by Graphics Processing Units (GPUs) or specialized AI accelerators. Finally, inferencing, or the application of trained models to new data, can also be resource-intensive, especially for real-time applications.

The Foundation of AI: High-Performance Computing

At the heart of any robust AI infrastructure lies the concept of High-Performance Computing (HPC). HPC traditionally refers to the aggregation of computing power to achieve higher performance than a typical desktop computer or workstation. For AI, this translates into environments optimized for parallel processing, massive data throughput, and low latency.

Consider the following critical components of an HPC environment tailored for AI:

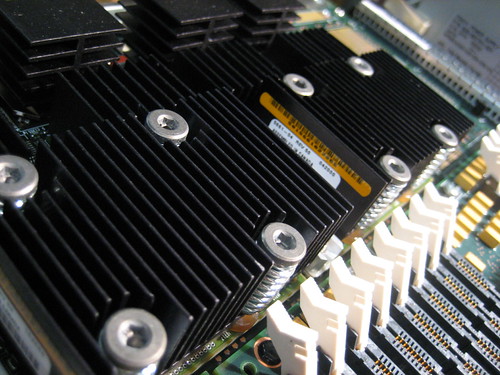

A. Accelerated Computing Hardware:
* GPUs: While initially designed for rendering graphics, GPUs have become the de facto standard for AI training due to their highly parallel architecture, making them exceptionally efficient at the matrix multiplication operations central to neural networks. Modern data centers often deploy racks filled with hundreds or thousands of GPUs.
* Tensor Processing Units (TPUs): Developed by Google, TPUs are application-specific integrated circuits (ASICs) custom-built for machine learning workloads. They offer superior performance and energy efficiency for certain types of AI computations, particularly within Google Cloud.
* Field-Programmable Gate Arrays (FPGAs): FPGAs offer flexibility, allowing developers to customize their hardware for specific AI tasks. While not as universally adopted as GPUs for general AI, they find niches in specialized applications requiring extreme efficiency and low latency.
* Neural Processing Units (NPUs): These are increasingly found in edge devices and dedicated AI hardware, designed for efficient inferencing at the edge, reducing reliance on cloud connectivity.
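
To see why matrix multiplication suits parallel hardware, consider the minimal pure-Python sketch below. Every output cell is an independent dot product, so in principle all of them could be computed at once. The function names and the toy layer sizes are illustrative assumptions, and the `2 * m * k * n` figure is the conventional approximation of the operation count, not a measurement of any particular device.

```python
def matmul(a, b):
    """Naive matrix product; each output cell is an independent dot product."""
    rows, inner, cols = len(a), len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def matmul_flops(m, k, n):
    """Approximate floating-point operations for an (m x k) @ (k x n) product."""
    return 2 * m * k * n  # one multiply and one add per inner-loop step


# Toy dense layer (hypothetical sizes): 2 samples, 3 features, 2 output units.
inputs = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs = matmul(inputs, weights)
flops = matmul_flops(2, 3, 2)
```

Because no output cell depends on another, a GPU can assign cells (or tiles of cells) to thousands of threads, which is the property the GPU bullet above refers to.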

B. High-Speed Networking: The sheer volume of data being shuffled between GPUs, storage, and other compute nodes necessitates very fast interconnects. Technologies like InfiniBand and RDMA-capable Ethernet (for example, RDMA over Converged Ethernet, or RoCE) are crucial for minimizing latency and maximizing data transfer rates, preventing bottlenecks that can cripple AI training times. Remote Direct Memory Access (RDMA) lets one node read or write another node's memory without involving the remote CPU. Without high-speed networking, even the most powerful GPUs can remain underutilized.
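
A rough way to see the effect of link speed is to estimate how long one gradient synchronization takes. The sketch below assumes a ring all-reduce, in which each worker sends and receives about `2 * (N - 1) / N` times the payload, and it ignores latency, protocol overhead, and overlap with computation. The model size, worker count, and link speeds are hypothetical.

```python
def ring_allreduce_seconds(payload_bytes, num_workers, link_bytes_per_sec):
    """Bandwidth-only estimate of one ring all-reduce (latency ignored)."""
    if num_workers < 2:
        return 0.0  # nothing to synchronize with a single worker
    traffic_per_worker = 2 * (num_workers - 1) / num_workers * payload_bytes
    return traffic_per_worker / link_bytes_per_sec


# Hypothetical job: 1 billion parameters with 2-byte gradients, 8 workers.
gradient_bytes = 1_000_000_000 * 2

fast_link = 100e9 / 8  # 100 Gb/s expressed in bytes per second
slow_link = 10e9 / 8   # 10 Gb/s expressed in bytes per second

fast_sync = ring_allreduce_seconds(gradient_bytes, 8, fast_link)
slow_sync = ring_allreduce_seconds(gradient_bytes, 8, slow_link)
```

Under these assumptions the slower link makes every synchronization step ten times longer, and that is time the GPUs spend waiting unless communication overlaps with computation.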

C. Massive Storage Solutions: AI models consume and generate enormous amounts of data. This demands storage solutions that are not only vast in capacity but also incredibly fast.
* All-Flash Arrays (AFAs): Providing superior input/output operations per second (IOPS) and low latency, AFAs are ideal for hot data and frequently accessed datasets during model training.
* Network-Attached Storage (NAS): For shared access and less frequently accessed data, high-performance NAS systems can be employed.
* Object Storage: For archival purposes and highly scalable data lakes, object storage solutions like Amazon S3 or Google Cloud Storage offer cost-effective and durable options, though with higher latency than block or file storage.
* Parallel File Systems: Solutions like Lustre or GPFS (later IBM Spectrum Scale, now IBM Storage Scale) are designed to handle concurrent access from thousands of compute nodes, crucial for large-scale AI training.
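
How fast is "incredibly fast"? A back-of-the-envelope estimate multiplies the number of GPUs by the samples each consumes per second and the size of a sample. The sketch below does that arithmetic. All figures are hypothetical, and it ignores caching, compression, and data augmentation on the host.

```python
def required_read_bytes_per_sec(num_gpus, samples_per_sec_per_gpu, bytes_per_sample):
    """Sustained read throughput needed so that no GPU waits for data."""
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample


# Hypothetical image-training job: 64 GPUs, 1,000 images/s each, 150 KB per image.
needed = required_read_bytes_per_sec(64, 1_000, 150_000)
needed_gb_per_sec = needed / 1e9
print(f"Storage must sustain about {needed_gb_per_sec:.1f} GB/s of reads")
```

An estimate like this helps decide which tier can hold the working set: a figure in the gigabytes-per-second range points toward flash or a parallel file system for hot data.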

Cloud vs. On-Premises: A Strategic Decision

The choice between building an on-premises AI infrastructure and leveraging cloud-based AI services is a strategic one that turns on cost, control, scalability, and security.

- On-Premises Infrastructure: For organizations with stringent data sovereignty requirements, pre-existing data centers, or a need for absolute control over their hardware and software stack, on-premises solutions can be attractive. This approach demands significant upfront capital expenditure (CapEx) for hardware procurement, cooling, and power, as well as highly skilled personnel for management and maintenance. However, for consistent, high-utilization workloads it can offer lower total cost over the long term, along with predictable performance.

- Cloud-Based AI Services: The cloud offers great flexibility and scalability, allowing businesses to rapidly provision and de-provision AI infrastructure on demand. This translates to a pay-as-you-go operational expenditure (OpEx) model, where costs scale with usage, making it ideal for experimental workloads, fluctuating demands, or smaller organizations without the capital for large-scale hardware investments. Major cloud providers like AWS, Google Cloud, and Microsoft Azure offer a rich ecosystem of AI-specific services, including pre-trained models, managed machine learning platforms, and access to the latest GPU and TPU instances. This can significantly reduce time-to-market for AI initiatives.

Many organizations adopt a hybrid approach, running core, stable AI workloads on-premises for cost efficiency and control, while leveraging the cloud for burst capacity, development, and access to cutting-edge services.
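
The cost trade-off described above can be made concrete with a simple break-even estimate. The sketch below assumes a linear model, with a one-time CapEx plus a fixed monthly running cost on-premises and a flat hourly rate in the cloud. Every figure is hypothetical, and real comparisons would also account for depreciation, discounts, staffing, and hardware refresh cycles.

```python
def breakeven_months(capex, onprem_monthly, cloud_hourly, hours_per_month):
    """Months until cumulative cloud spend exceeds on-premises spend.

    Returns None when the cloud is no more expensive per month, so the
    on-premises purchase never pays for itself under this linear model.
    """
    cloud_monthly = cloud_hourly * hours_per_month
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None
    return capex / monthly_saving


# Hypothetical figures: a $300,000 server, $4,000/month to run it,
# and a comparable cloud instance at $30/hour.
steady = breakeven_months(300_000, 4_000, 30, hours_per_month=720)
bursty = breakeven_months(300_000, 4_000, 30, hours_per_month=100)
```

With these made-up numbers, a workload that runs around the clock pays back the hardware in roughly 17 months, while one that runs 100 hours a month never does. That is the pattern behind running steady workloads on-premises and bursty ones in the cloud.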

Optimizing for Performance and Efficiency

Beyond raw horsepower, optimizing AI infrastructure involves several key strategies to maximize performance and minimize operational costs:

A. Containerization and Orchestration: Technologies like Docker and Kubernetes are indispensable for managing complex AI workflows.
* Containerization: Encapsulates AI applications and their dependencies into portable, isolated units, ensuring consistent execution across different environments.
* Orchestration: Kubernetes automates the deployment, scaling, and management of containerized applications, enabling efficient resource utilization and simplified model deployment. It helps manage GPU allocation, ensures high availability, and facilitates horizontal scaling of AI workloads.
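
As an illustration of GPU allocation, the sketch below builds a minimal Kubernetes Pod manifest in Python and serializes it to JSON, which Kubernetes accepts alongside YAML. It assumes a cluster where the NVIDIA device plugin exposes GPUs under the `nvidia.com/gpu` resource name. The pod name, container name, and image are placeholders, not real artifacts.

```python
import json


def gpu_pod_manifest(name, image, gpus):
    """Build a minimal Pod spec that requests `gpus` whole GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # placeholder container name
                    "image": image,
                    # GPUs are requested through resource limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Placeholder name and image, used only for illustration.
manifest = gpu_pod_manifest("train-job", "registry.example.com/trainer:latest", 2)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

The scheduler places such a pod only on a node with enough unallocated GPUs, which is how the orchestrator keeps jobs from oversubscribing accelerators.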

B. Data Governance and Pipelines: Effective data management is paramount. Establishing robust data pipelines ensures that high-quality data flows seamlessly from source to AI models. This includes data ingestion, cleaning, transformation, and secure storage. Data lakes and data warehouses are foundational for aggregating and organizing the vast datasets required for AI.

C. Model Management and MLOps: As AI models proliferate, managing their lifecycle becomes critical. Machine Learning Operations (MLOps) is a set of practices that aims to apply DevOps principles to machine learning, automating the deployment, monitoring, and retraining of AI models. This ensures models remain accurate and performant over time. Key aspects include:
* Version Control for Models: Tracking changes to models and datasets.
* Automated Testing and Validation: Ensuring model quality before deployment.
* Continuous Integration/Continuous Deployment (CI/CD) for AI: Streamlining the delivery of new and updated models.
* Model Monitoring: Tracking performance metrics and detecting drift in production.
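
One common way to detect drift is to compare the distribution of a feature in production against its distribution at training time. The sketch below uses the Population Stability Index (PSI) as one possible metric. It is an illustrative choice rather than a method prescribed here, and the bin count, the smoothing constant `eps`, and the sample data are all assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training data vs. shifted production data.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]

no_drift = psi(training, training)
drift = psi(training, production)
```

A monitoring job could compute this per feature on a schedule and raise an alert, or trigger retraining, when the value crosses a threshold the team has chosen. PSI values above roughly 0.25 are often treated as a significant shift, though that cutoff is a rule of thumb.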

D. Energy Efficiency and Cooling: The immense power consumption of AI hardware, particularly GPUs, generates significant heat. Designing and operating an energy-efficient infrastructure is not only environmentally responsible but also economically crucial. Advanced cooling solutions, such as liquid cooling (including direct-to-chip and immersion cooling), are becoming increasingly prevalent in AI-focused data centers to manage heat dissipation effectively and lower power usage effectiveness (PUE), the ratio of total facility energy to the energy delivered to IT equipment, where values closer to 1.0 are better.
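
PUE is the total facility power divided by the power delivered to IT equipment, so a value of 1.0 would mean no overhead at all. The sketch below computes it for two hypothetical facilities. The kilowatt figures are made up for illustration and are not measurements of any real cooling system.

```python
def pue(it_kw, overhead_kw):
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + overhead_kw) / it_kw


# Hypothetical 1 MW IT load with two different cooling overheads.
air_cooled = pue(it_kw=1000, overhead_kw=600)
liquid_cooled = pue(it_kw=1000, overhead_kw=200)
print(f"air: {air_cooled:.2f}, liquid: {liquid_cooled:.2f}")
```

In this example, cutting cooling and other overhead from 600 kW to 200 kW lowers PUE from 1.6 to 1.2, meaning 400 kW less power drawn for the same compute.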

E. Security Posture: AI infrastructure, handling sensitive data and critical algorithms, presents an attractive target for cyber threats. A robust security posture is non-negotiable. This includes:
* Network Segmentation: Isolating AI workloads from other parts of the network.
* Access Control: Implementing strict identity and access management (IAM) policies.
* Data Encryption: Encrypting data at rest and in transit.
* Vulnerability Management: Regularly patching and scanning for security vulnerabilities.
* Threat Detection and Response: Employing advanced security analytics to detect and respond to threats in real time.

The Future of AI Infrastructure

The demands of AI workloads will only continue to escalate, pushing innovation in several key areas:

- Specialized AI Processors: Expect to see continued proliferation of custom-designed AI chips optimized for specific neural network architectures and tasks, offering even greater performance and efficiency than general-purpose GPUs.

- Neuromorphic Computing: Inspired by the human brain, neuromorphic chips aim to process information in a fundamentally different way, potentially leading to ultra-low-power AI at the edge.

- Photonics and Optical Computing: Exploring the use of light for computation could revolutionize AI, offering unprecedented speed and energy efficiency.

- Edge AI Infrastructure: Pushing AI processing closer to the data source (e.g., in smart devices, autonomous vehicles, industrial IoT) reduces latency, conserves bandwidth, and enhances privacy. This requires robust, miniature, and power-efficient AI hardware at the network’s edge.

- Sustainability: As AI grows, so does its carbon footprint. Future infrastructure will prioritize even greater energy efficiency, leveraging renewable energy sources and advanced cooling techniques.

In conclusion, the era of AI is not merely about algorithms and models; it is just as much about the underlying infrastructure that powers them. Businesses that proactively invest in and optimize their compute, storage, and networking capabilities will be best positioned to innovate, scale, and gain a competitive edge in an increasingly AI-driven world. The strategic implementation of powerful, efficient, and secure infrastructure is no longer optional but a fundamental necessity for unlocking the true potential of artificial intelligence.