The digital backbone of the modern global economy is no longer found in dusty basement server rooms but in the vast, largely invisible expanses of enterprise cloud infrastructure. As businesses scale rapidly, the ability to fine-tune these virtual environments has become a real competitive advantage for technical leaders. Effective optimization is not merely about cutting costs; it is about removing avoidable latency so that users get a seamless experience. We are also seeing a shift toward treating infrastructure as code, which allows for automated scaling and self-healing systems that react to traffic spikes in near real time.

However, without a strategic approach to resource allocation and network topology, even the most expensive cloud setups can become sluggish and inefficient. Navigating the complexities of multi-cloud environments, container orchestration, and serverless architectures requires a deep understanding of how hardware and software interact at scale. This comprehensive exploration will guide you through the technical pillars of high-performance cloud management, from CPU pinning to global load balancing. By the end of this deep dive, you will have a clear blueprint for transforming your current infrastructure into a lean, high-speed engine of innovation.

Understanding the Core Pillars of Cloud Efficiency

To optimize a complex cloud environment, one must first master the fundamental components that dictate how data moves and processes. Every layer of the stack offers an opportunity for a performance breakthrough.

A. Compute Resource Allocation

Selecting the right instance type is critical: a memory-heavy task placed on a compute-optimized node may run short of RAM and start swapping, or be killed outright. You must balance the number of virtual CPU cores against the available RAM, and watch for CPU “throttling” on burstable instance types during peak operations.
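As a minimal sketch of that balancing act, the Python snippet below picks the cheapest instance type that satisfies both a vCPU and a memory requirement. The catalog, instance names, and prices are hypothetical and do not correspond to any real provider's price list.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float


# Hypothetical catalog: names, sizes, and prices are illustrative only.
CATALOG = [
    InstanceType("compute-large", 8, 16, 0.34),
    InstanceType("general-large", 8, 32, 0.38),
    InstanceType("memory-large", 8, 64, 0.50),
    InstanceType("memory-xlarge", 16, 128, 1.00),
]


def pick_instance(vcpus_needed: int, memory_gib_needed: float,
                  catalog: List[InstanceType] = CATALOG) -> Optional[InstanceType]:
    """Return the cheapest type that meets both requirements, or None."""
    fits = [i for i in catalog
            if i.vcpus >= vcpus_needed and i.memory_gib >= memory_gib_needed]
    return min(fits, key=lambda i: i.hourly_usd) if fits else None
```

With this catalog, a job needing 4 vCPUs and 48 GiB of RAM lands on the memory-optimized type even though the compute-optimized one has enough cores, which is exactly the mismatch described above.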

B. Storage Throughput and Latency

Not all cloud storage is created equal, and choosing between block, file, or object storage can make or break your database speed. High-performance NVMe-based drives are essential for applications that require thousands of input/output operations per second.

C. Network Topology and Bandwidth

How your virtual machines talk to each other determines the overall lag of your system. Using private links and placement groups can significantly reduce the “hop” distance between your application and your database.

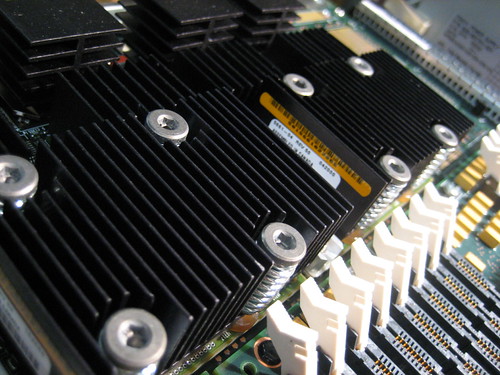

Advanced Virtualization and Containerization

The way we package and deploy applications has a direct impact on how the underlying server hardware performs. Modern enterprises are moving away from heavy virtual machines toward lightweight containers.

A. Kubernetes Orchestration and Scaling

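Kubernetes allows you to manage thousands of containers with automated bin-packing: the scheduler places each pod on a node that has enough unreserved CPU and memory for the pod’s resource requests. When those requests are set accurately, this keeps server utilization high and reduces the amount of memory left stranded on half-empty nodes.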
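To make the bin-packing idea concrete, here is a simplified sketch using the classic first-fit decreasing heuristic. It is not the Kubernetes scheduler's actual algorithm, which filters and scores nodes on many criteria; the function name and the memory-only model are assumptions made for illustration.

```python
from typing import List


def pack_pods(pod_memory_mib: List[int], node_capacity_mib: int) -> List[List[int]]:
    """Assign pods to nodes with first-fit decreasing.

    Returns one list of pod sizes per node that had to be used.
    """
    nodes: List[List[int]] = []
    # Placing the largest pods first tends to leave fewer unusable gaps.
    for pod in sorted(pod_memory_mib, reverse=True):
        if pod > node_capacity_mib:
            raise ValueError(f"pod of {pod} MiB cannot fit on any node")
        for node in nodes:
            if sum(node) + pod <= node_capacity_mib:
                node.append(pod)
                break
        else:
            # No existing node had room, so open a new one.
            nodes.append([pod])
    return nodes
```

For example, pods requesting 512, 256, 768, 256, and 256 MiB fit onto two 1024 MiB nodes with no spare capacity, where a naive one-pod-per-node layout would need five.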

B. Microservices Communication Optimization

In a microservices world, the “service mesh” handles the traffic between different parts of your app. Optimizing this mesh reduces the overhead of constant network handshakes and encrypted data transfers.

C. Serverless Function Cold Starts

Serverless computing offers great scalability, but “cold starts” can introduce unwanted latency. Using “provisioned concurrency” ensures that your functions are warm and ready to execute the moment a user clicks a button.
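A rough way to reason about the impact is to weight the cold-start penalty by how often it occurs. The sketch below assumes a simple model in which a fixed fraction of requests pay a fixed extra delay; the numbers in the usage note are made up for illustration.

```python
def mean_latency_ms(warm_ms: float, cold_penalty_ms: float,
                    cold_fraction: float) -> float:
    """Average latency when `cold_fraction` of requests pay the cold-start penalty."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return warm_ms + cold_fraction * cold_penalty_ms
```

With a 40 ms warm response and an 800 ms cold-start penalty, a cold fraction of just five percent doubles the average to roughly 80 ms. Keeping instances warm pushes that fraction, and the average, back toward the warm figure.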

Database Performance Tuning in the Cloud

The database is often the primary bottleneck in any enterprise infrastructure. Moving to the cloud requires a new set of rules for managing data flow and query execution.

A. Read Replicas and Global Distribution

By placing read-only copies of your database closer to your users, you can dramatically reduce load times. This offloads the pressure from your primary “writer” database, allowing it to focus on complex transactions.
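The read/write split can be sketched as a small router that sends `SELECT` statements to replicas in round-robin order and everything else to the primary. The class and endpoint names are hypothetical, and the sketch deliberately ignores replication lag and statements such as `SELECT ... FOR UPDATE`, which belong on the primary.

```python
import itertools
from typing import List


class QueryRouter:
    """Route read queries to replicas and all other statements to the primary."""

    def __init__(self, primary: str, replicas: List[str]):
        self.primary = primary
        # cycle() yields the replicas in order, forever (round-robin).
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        words = sql.split()
        verb = words[0].upper() if words else ""
        if verb == "SELECT" and self._replicas is not None:
            return next(self._replicas)
        return self.primary
```

In practice this decision is often made by a database proxy or driver rather than application code, but the principle is the same.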

B. In-Memory Caching Strategies

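Tools like Redis act as a high-speed buffer between your app and your disk-based database. Serving frequently accessed data from RAM avoids a disk-backed query on every request, so response times for cache hits can drop sharply; the actual gain depends on your hit rate and on how slow the underlying query was.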
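The usual pattern here is “cache-aside”: check the cache first and fall back to the database only on a miss. The sketch below uses an in-process dictionary as a stand-in for Redis so that it runs without any external service; the class name and the `loader` callback are assumptions for illustration.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """In-process stand-in for an external cache, with per-entry expiry."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[Any, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: Any, loader: Callable[[Any], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]          # cache hit: skip the database entirely
        value = loader(key)          # cache miss: run the slow query
        self._store[key] = (now + self._ttl, value)
        return value
```

The time-to-live bounds how stale a cached value can get, which is the main trade-off to tune: a longer TTL means more hits but older data.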

C. Auto-Indexing and Query Optimization

Cloud databases now feature AI-driven tools that suggest the best indexes for your specific data patterns. Cleaning up slow queries is the most cost-effective way to boost infrastructure performance.

Strategic Global Load Balancing

When your users are spread across the globe, a single server location will never be enough. You need a sophisticated traffic management system to direct users to the fastest possible path.

A. Anycast IP and DNS Steering

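Using Anycast allows multiple servers to share a single IP address, with network routing (BGP) delivering each user to the nearest data center in terms of network path. This is usually, though not always, the geographically closest one, and it shortens the distance data must travel across long-haul and undersea cables.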

B. Content Delivery Network (CDN) Integration

A CDN caches your static assets, like images and videos, on “edge” servers located in almost every major city. This ensures that the heavy lifting is done far away from your expensive core infrastructure.

C. Application Load Balancer (ALB) Logic

ALBs work at the “application layer” (layer 7), allowing them to make smart routing decisions based on the content of a request, such as its path or host header. They run periodic health checks against each server and stop sending traffic to any that fail, typically within a few check intervals.
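The routing logic can be sketched as two steps: pick a target group by the longest matching path prefix, then pick a healthy target within it. This is a toy model under stated assumptions, not any provider's implementation; `TargetGroup`, `route`, and the server names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


class TargetGroup:
    """A pool of servers with a health flag per target."""

    def __init__(self, name: str, targets: List[str]):
        self.name = name
        self.health: Dict[str, bool] = {t: True for t in targets}
        self._next = 0

    def mark(self, target: str, healthy: bool) -> None:
        """Record the result of a health check."""
        self.health[target] = healthy

    def pick(self) -> Optional[str]:
        """Round-robin over the targets that are currently healthy."""
        healthy = [t for t, ok in self.health.items() if ok]
        if not healthy:
            return None
        target = healthy[self._next % len(healthy)]
        self._next += 1
        return target


def route(path: str, rules: List[Tuple[str, TargetGroup]],
          default: TargetGroup) -> Optional[str]:
    """Choose the group with the longest matching path prefix, then a target."""
    matches = [(prefix, group) for prefix, group in rules if path.startswith(prefix)]
    group = max(matches, key=lambda m: len(m[0]))[1] if matches else default
    return group.pick()
```

Once a health check marks a server unhealthy, it simply drops out of the rotation, so no request is routed to it until it recovers.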

Monitoring and Observability for Real-Time Gains

You cannot optimize what you do not measure. Observability is the practice of looking deep inside your infrastructure to find hidden bottlenecks.

A. Distributed Tracing Across Services

Tracing allows you to follow a single user request as it travels through dozens of different microservices. This highlights exactly which service is causing a delay in the overall chain.
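One common way to read a trace is to compute each service's “self time”: the duration of its span minus the time spent waiting on its children. The sketch below assumes a simplified span format (plain dictionaries with hypothetical field names) and child calls that run one after another rather than in parallel.

```python
from typing import Dict, List


def self_times(spans: List[dict]) -> Dict[str, int]:
    """Return milliseconds spent inside each service, excluding child spans.

    Each span is a dict with keys: id, parent, service, start_ms, end_ms.
    Assumes a span's children do not overlap in time.
    """
    child_total: Dict[int, int] = {}
    for span in spans:
        if span["parent"] is not None:
            duration = span["end_ms"] - span["start_ms"]
            child_total[span["parent"]] = child_total.get(span["parent"], 0) + duration

    result: Dict[str, int] = {}
    for span in spans:
        own = (span["end_ms"] - span["start_ms"]) - child_total.get(span["id"], 0)
        result[span["service"]] = result.get(span["service"], 0) + own
    return result
```

A gateway span may show the longest total duration simply because it wraps everything else; self time points at the service where the time is actually being spent.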

B. Infrastructure Metrics and Thresholds

Setting up alerts for CPU spikes, disk fullness, and memory leaks is the first line of defense. Real-time dashboards provide a “god view” of your entire digital empire.
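A threshold alert is more useful when it ignores one-off blips. The minimal sketch below fires only when a metric stays above its threshold for a set number of consecutive samples; the function name and parameters are illustrative rather than taken from any monitoring product.

```python
from typing import Iterable


def should_alert(samples: Iterable[float], threshold: float, consecutive: int) -> bool:
    """Return True if `consecutive` samples in a row exceed `threshold`."""
    run = 0
    for value in samples:
        # Any sample at or below the threshold resets the streak.
        run = run + 1 if value > threshold else 0
        if run >= consecutive:
            return True
    return False
```

For CPU readings of 50, 95, 60, 92, 93, and 96 percent with a threshold of 90 and a window of three, the single spike to 95 is ignored and the alert fires on the final three readings.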

C. Log Aggregation and Error Analysis

Centralizing your logs allows you to use machine learning to find patterns in system failures. This “AIOps” approach can sometimes flag the early warning signs of a failure, such as rising error rates, before it causes an outage.

Security Without Sacrificing Speed

Many security protocols introduce latency, but modern enterprise cloud setups utilize hardware-accelerated encryption to keep things fast.

A. Zero Trust Network Access (ZTNA)

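Instead of routing all traffic through a central VPN concentrator, ZTNA verifies every user and device on a per-request basis. This “identity-aware” proxy approach lets security checks happen at the edge of the network, close to the user, which helps avoid a single chokepoint.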

B. Hardware Security Modules (HSM)

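Using dedicated hardware for encryption keys keeps them isolated from your application servers. Pairing this with hardware-accelerated cryptography, such as the AES instructions built into modern CPUs or TLS termination at the load balancer, keeps the “handshake” and encryption work from eating into the capacity available for application tasks.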

C. DDoS Protection and Scrubbing

Enterprise cloud providers offer massive “scrubbing” centers that filter out malicious traffic before it hits your servers. This keeps attack traffic from consuming the capacity your legitimate users depend on.

Cost Optimization as a Performance Metric

Inefficient spending usually indicates an inefficient technical setup. Performance and cost are two sides of the same coin in the cloud world.

A. Right-Sizing and Orphaned Resource Cleanup

Many companies pay for giant servers that are only using ten percent of their power. Automated tools can “right-size” these instances to match their actual workload perfectly.
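A right-sizing recommendation can be sketched as: take a high percentile of observed CPU utilization, then scale the core count so that peak lands at a target utilization. The 60 percent target and the nearest-rank percentile are assumptions for illustration; real tools also weigh memory, network, and burst behavior.

```python
import math
from typing import List


def p95(values: List[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def recommend_vcpus(current_vcpus: int, cpu_utilization_pct: List[float],
                    target_pct: float = 60) -> int:
    """Suggest a vCPU count that would put p95 utilization near `target_pct`."""
    needed = current_vcpus * p95(cpu_utilization_pct) / target_pct
    return max(1, math.ceil(needed))
```

A 32-vCPU server that sits at ten percent utilization comes out at 6 vCPUs under these assumptions, while a 4-vCPU server pinned at ninety percent is flagged as needing 6.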

B. Spot Instance Integration for Batch Jobs

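For tasks that aren’t time-sensitive and can tolerate interruption, using “spot” capacity can save up to ninety percent on compute costs compared with on-demand pricing. The provider can reclaim spot capacity at short notice, so jobs should checkpoint their progress. This allows you to run massive data processing jobs without blowing your budget.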
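Because spot capacity can be taken back by the provider, batch jobs that run on it are usually written to resume where they left off. The sketch below shows the idea with an in-memory checkpoint dictionary; in a real job the checkpoint would live in durable storage, and the `interrupted` callback is a hypothetical stand-in for the provider's interruption notice.

```python
from typing import Callable, Dict, List


def run_batch(items: List, process: Callable, checkpoint: Dict[str, int],
              interrupted: Callable[[], bool] = lambda: False) -> bool:
    """Process items starting at checkpoint['next'].

    Progress is saved after every item. Returns True when all items are
    done, or False if the run stopped early because of an interruption.
    """
    index = checkpoint.get("next", 0)
    while index < len(items):
        if interrupted():
            return False
        process(items[index])
        index += 1
        checkpoint["next"] = index  # persist progress before moving on
    return True
```

If the instance is reclaimed partway through, a replacement can call `run_batch` again with the same checkpoint and only the remaining items are processed.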

C. Reserved Instances and Savings Plans

By committing to a certain amount of usage, typically for one or three years, you can secure the same hardware at a substantially lower price. The savings can then be invested in high-performance infrastructure upgrades.

The Role of Edge Computing in the Enterprise

We are moving past the “centralized cloud” into a “distributed edge” model. This brings the processing power as close to the end-user as possible.

A. IoT Gateways and Local Processing

For companies with physical sensors, processing data at the “edge” reduces the amount of noise sent to the cloud. Only the most important insights are sent back to the main data center.
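One simple form of edge-side noise reduction is a “deadband” filter: a reading is forwarded only when it differs from the last forwarded value by at least some minimum amount. The function name and the temperature figures below are illustrative assumptions.

```python
from typing import List, Optional


def deadband_filter(readings: List[float], min_change: float) -> List[float]:
    """Keep only readings that moved at least `min_change` since the last one sent."""
    sent: List[float] = []
    last: Optional[float] = None
    for value in readings:
        if last is None or abs(value - last) >= min_change:
            sent.append(value)
            last = value
    return sent
```

For temperature readings of 20.0, 20.1, 20.2, 21.5, 21.6, and 19.0 with a one-degree deadband, only 20.0, 21.5, and 19.0 are sent upstream, halving the traffic while keeping every meaningful change.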

B. 5G and Low-Latency Mobile Apps

As 5G networks expand, the infrastructure must be ready to handle millisecond-level responses. Edge servers allow for real-time AR and VR applications that would be impossible with a traditional setup.

C. Edge AI and Local Inference

Running AI models directly on edge servers allows for instant facial recognition or voice processing. This removes the “round-trip” time to a distant cloud server.

Disaster Recovery and High Availability

A high-performance system is one that stays available when individual components fail. Scalable infrastructure must be designed for “failure as a standard.”

A. Multi-Region Redundancy

If an entire geographic region goes offline, your infrastructure should automatically flip to a backup region. This requires real-time data synchronization across thousands of miles.

B. Automated Failover Protocols

Failover should never be manual. Health checks should trigger the “promotion” of a backup database to the primary role the moment an issue is detected.
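The promotion logic can be sketched as a small state machine that counts consecutive failed health checks and swaps in the standby once a threshold is reached. The class name, the threshold of three, and the database names are assumptions; managed database services implement this for you, with additional safeguards against split-brain.

```python
from typing import Optional


class FailoverMonitor:
    """Promote the standby after a run of consecutive failed health checks."""

    def __init__(self, primary: str, standby: Optional[str],
                 failure_threshold: int = 3):
        self.primary = primary
        self.standby = standby
        self._threshold = failure_threshold
        self._failures = 0

    def record_check(self, healthy: bool) -> str:
        """Record one health check result and return the current primary."""
        if healthy:
            self._failures = 0
            return self.primary
        self._failures += 1
        if self._failures >= self._threshold and self.standby is not None:
            # Promote the standby; there is no further backup until one is added.
            self.primary, self.standby = self.standby, None
            self._failures = 0
        return self.primary
```

Requiring several failures in a row trades a few seconds of detection time for protection against failing over on a single dropped health check.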

C. Chaos Engineering and Stress Testing

To ensure performance under pressure, you must intentionally break your own systems. Testing how your infrastructure reacts to a simulated server crash, in a controlled way, is one of the most reliable ways to build confidence in its resilience.
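At its smallest scale, a chaos experiment is a fault injected on purpose plus a check that the system copes. The sketch below wraps a function so that its first few calls fail, then verifies that a simple retry loop recovers. Both helper names are hypothetical, and real chaos tooling works at the infrastructure level rather than inside a single process.

```python
from typing import Callable


def flaky(func: Callable, failures: int) -> Callable:
    """Wrap `func` so that its first `failures` calls raise ConnectionError."""
    remaining = [failures]

    def wrapper(*args, **kwargs):
        if remaining[0] > 0:
            remaining[0] -= 1
            raise ConnectionError("injected fault")
        return func(*args, **kwargs)

    return wrapper


def call_with_retry(func: Callable, attempts: int):
    """Call `func` up to `attempts` times, re-raising the last ConnectionError."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except ConnectionError as error:
            last_error = error
    raise last_error
```

Injecting two failures against a three-attempt retry succeeds, while injecting three exposes the point at which the caller gives up, which is the kind of limit these experiments are meant to surface.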

Conclusion

Optimizing enterprise cloud infrastructure is a continuous journey of technical refinement. The foundation of success lies in the perfect alignment of compute and storage resources. Virtualization through containers ensures that every cycle of your processor is used effectively. Global traffic management is essential for providing a fast experience to a distributed audience. Observability tools provide the data necessary to find and fix hidden performance bottlenecks.

Security must be integrated at the hardware level to avoid slowing down the user experience. Scaling your infrastructure should be an automated process driven by real-time demand. Cost management is a direct reflection of how efficiently your digital resources are utilized. Edge computing is the next frontier for reducing latency in highly interactive applications. Database tuning remains the most impactful way to improve overall application speed.

Resilience is a performance metric because downtime is the ultimate form of high latency. The move toward serverless architecture simplifies scaling but requires careful cold-start management. Modern enterprises must treat their infrastructure as code to maintain agility and speed. Artificial intelligence is becoming the primary tool for managing complex cloud environments. The ultimate goal of optimization is to make the technology invisible to the end-user. Strategic infrastructure investment today creates the scalable platform for tomorrow’s growth.