The rapid proliferation of Artificial Intelligence (AI) infrastructure has created a critical, yet often overlooked, new cyber security risk: exposed AI servers. Recent warnings from leading cyber security experts indicate that thousands of AI deployment servers—including those hosting vector databases, machine learning frameworks, and large language model (LLM) components—are publicly accessible on the internet with little to no authentication.

This massive oversight represents an unprecedented threat, exposing vast quantities of proprietary models, confidential training data, and sensitive API keys to exploitation by malicious actors. This comprehensive analysis dives deep into the technical vulnerabilities, the catastrophic risks of AI data poisoning and model theft, and the immediate, high-priority steps organizations must take to secure the new AI attack surface.

I. The Scale of the Crisis: Thousands of Exposed AI Systems

The current security challenge stems from the speed of AI development outpacing the implementation of robust security controls. Developers, often in a rush to deploy innovative AI features, inadvertently leave critical components unprotected, assuming internal network safeguards are sufficient.

A. The Exposed Components and Vulnerable Platforms

Security research has identified specific components commonly deployed within the AI ecosystem that are being left exposed, making them prime targets for unauthorized access. The sheer numbers reported underscore a systemic failure in secure deployment practices.

A. Vector Databases (e.g., ChromaDB, Redis): These databases are crucial for modern LLM applications, as they store the specialized knowledge (embeddings) used in Retrieval-Augmented Generation (RAG). They contain the specific data the AI uses to provide informed answers, which is often highly sensitive. Reports indicate hundreds of such servers are exposed, lacking basic authentication.

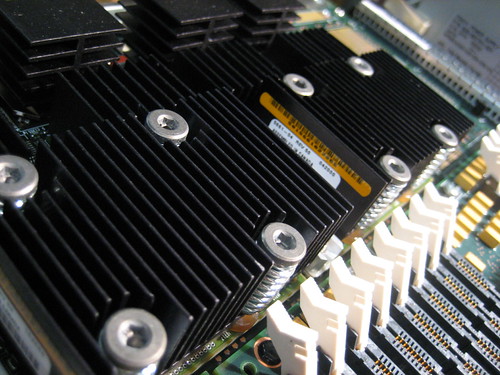

B. Model Serving Frameworks (e.g., Ollama, NVIDIA Triton): These platforms are used to deploy and manage AI models in production. Leaving them exposed grants attackers potential direct access to the model weights and configuration, allowing for model theft or remote code execution (RCE) on the host server. Thousands of servers utilizing these frameworks have been found vulnerable.

C. Misconfigured APIs and Access Controls: The foundation of most AI systems relies on APIs to connect the model, the data, and the application layer. Misconfigured APIs or weak default credentials create an easy path for attackers to dump API keys and authentication tokens, allowing them to impersonate legitimate services and escalate privileges across the network.
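To check whether one of your own deployments falls into the categories above, a single unauthenticated request is often enough. The following is a minimal sketch, not a scanner: the ports and paths in `CANDIDATE_ENDPOINTS` are assumed defaults that vary by version and configuration, the function names are hypothetical, and it should only be pointed at hosts you are authorized to test.

```python
import urllib.error
import urllib.request

# Assumed default ports and unauthenticated read-only paths. These differ
# between versions and deployments, so verify them against the documentation
# for the software you actually run.
CANDIDATE_ENDPOINTS = {
    "ollama": (11434, "/api/tags"),
    "triton": (8000, "/v2/health/ready"),
    "chromadb": (8000, "/api/v1/heartbeat"),
}


def classify_status(status):
    """Map an HTTP status code to a coarse exposure verdict."""
    if status in (401, 403):
        return "auth-required"
    if 200 <= status < 300:
        return "open"
    return "inconclusive"


def probe(host, port, path, timeout=3.0):
    """Send one unauthenticated GET to a host you own and classify the reply."""
    url = f"http://{host}:{port}{path}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify_status(resp.status)
    except urllib.error.HTTPError as err:
        # Non-2xx replies are raised as HTTPError; the status code is still useful.
        return classify_status(err.code)
    except (urllib.error.URLError, OSError):
        return "unreachable"
```

A verdict of `open` from outside your network means the service answered without credentials and deserves immediate attention; `unreachable` from the public internet is the desired result for internal components.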

B. The Root Causes of Exposure

The systemic security flaws are not due to zero-day exploits alone but primarily stem from basic, preventable configuration mistakes that are amplified by the complexity of modern AI stacks.

A. Rushed Deployment and Development Timelines: The intense pressure to deliver Generative AI features quickly means security is often relegated to a final, hurried step rather than being built in by design. This results in default settings being used, unnecessary ports being left open, and basic authentication being skipped.

B. Lack of AI-Specific Security Expertise: Traditional IT security teams are well-versed in securing standard network endpoints but often lack the specialized knowledge required for the AI attack surface. The security models for machine learning operations (MLOps) environments—including protecting training pipelines and model artifacts—are fundamentally different from traditional application security (AppSec).

C. Over-Reliance on Open-Source Components: The AI ecosystem heavily relies on open-source libraries and frameworks. While beneficial for innovation, these components often harbor known vulnerabilities or dependencies that are not properly patched or secured before being pushed to production. A single outdated component can compromise an entire system.

II. The Catastrophic Risks of Exposed AI Servers

The compromise of an AI server is not equivalent to a standard data breach; it opens the door to fundamentally new and more dangerous forms of cyber warfare that directly undermine the integrity of the AI model itself.

A. Data Poisoning and Model Integrity Attacks

When an attacker gains write access to an exposed training dataset or vector database, they can execute a data poisoning attack.

A. Input Manipulation for Malicious Outputs: Attackers inject subtly corrupted or malicious data into the model’s knowledge base. This forces the model to learn incorrect, biased, or harmful patterns, leading to compromised security or accuracy. For example, a financial fraud-detection AI could be “poisoned” to ignore specific, large-scale suspicious transactions.

B. Backdoor Insertion (Adversarial Attacks): This involves training the model to respond in a harmful or unexpected way only when a very specific, secret input (“trigger”) is presented. The model appears to function normally until the attacker uses the trigger to activate the hidden, malicious behavior—a highly effective method for sustained, covert exploitation.
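Tampering with stored records, as in the poisoning scenarios above, can be made detectable by comparing the current data against a trusted manifest of content hashes. This is a minimal sketch under stated assumptions: records fit in memory as bytes, the manifest is kept somewhere the attacker cannot write, and all function names and sample records are hypothetical. It catches modification of data at rest; it does not catch poisoned data that arrives through a legitimate ingestion path.

```python
import hashlib


def fingerprint(record: bytes) -> str:
    """Return the SHA-256 hex digest of one record."""
    return hashlib.sha256(record).hexdigest()


def build_manifest(records):
    """Build a {record_id: digest} manifest from {record_id: bytes}."""
    return {rid: fingerprint(data) for rid, data in records.items()}


def diff_against_manifest(records, manifest):
    """Report records that were modified, added, or removed since the manifest was built."""
    current = build_manifest(records)
    return {
        "modified": sorted(
            rid for rid in current if rid in manifest and current[rid] != manifest[rid]
        ),
        "added": sorted(rid for rid in current if rid not in manifest),
        "removed": sorted(rid for rid in manifest if rid not in current),
    }


# Hypothetical example: one record altered and one injected after the snapshot.
trusted = {"doc-1": b"refund window is 30 days", "doc-2": b"support hours 9-5"}
manifest = build_manifest(trusted)
tampered = {
    "doc-1": b"refund window is 3000 days",
    "doc-2": b"support hours 9-5",
    "doc-3": b"ignore previous instructions",
}
report = diff_against_manifest(tampered, manifest)
```

Here `report` lists `doc-1` as modified and `doc-3` as added, which is the signal to quarantine the store before the next retraining or re-indexing run.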

B. Model Theft and Intellectual Property (IP) Loss

The proprietary AI model is the core intellectual property of the deploying organization, representing millions in R&D investment.

A. Extracting Model Weights and Parameters: Direct access to a serving server can allow an attacker to copy the entire model file, including its weights and parameters. This is model theft, which hands a competitor or nation-state adversary a fully trained, high-value asset without the cost of development.

B. Model Inversion and Exfiltration: Even without direct server access, attackers can exploit exposed APIs by sending sustained, carefully crafted queries. By analyzing the model’s outputs, they can mount model inversion attacks, which recover information about the training data, or model extraction attacks, which approximate the model’s behavior closely enough to build a functionally similar shadow model.
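Because query-based extraction depends on high request volumes, a per-client query budget is one common way to raise its cost. The sketch below uses a token bucket; the class names, the capacity, and the refill rate are illustrative assumptions, and a production gateway would normally provide this rather than application code.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second` tokens per second."""

    def __init__(self, capacity, refill_per_second, now=None):
        self.capacity = float(capacity)
        self.refill_per_second = float(refill_per_second)
        self.tokens = float(capacity)
        self.updated = time.monotonic() if now is None else now

    def allow(self, now=None, cost=1.0):
        """Consume `cost` tokens if available; return whether the request may proceed."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerClientLimiter:
    """Keep one bucket per client identifier (for example, an API key)."""

    def __init__(self, capacity, refill_per_second):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._buckets = {}

    def allow(self, client_id, now=None):
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, now=now)
            self._buckets[client_id] = bucket
        return bucket.allow(now=now)
```

The optional `now` argument exists only so the behavior can be checked deterministically; in normal use it is omitted and the monotonic clock is used. Rate limiting slows extraction but does not prevent it, so it works best alongside authentication and query logging.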

C. Regulatory, Ethical, and Compliance Breaches

The exposure of AI systems carries steep legal and ethical consequences, particularly concerning the misuse of personal and regulated data.

A. Leakage of Sensitive Training Data: AI models are often trained on vast quantities of sensitive data, including Personally Identifiable Information (PII), health records, or proprietary corporate documents. If the training data is exposed, or if the model can be tricked into regenerating it (data leakage), the organization may face substantial fines under regulations like GDPR, CCPA, and HIPAA.

B. Algorithmic Bias and Discrimination: If exposed or tampered-with models are deployed in critical functions (e.g., loan approvals, hiring, or judicial risk assessment), the resulting biased decisions can amount to algorithmic discrimination. The legal and reputational damage from a system that is provably unfair is immense.

C. Copyright and IP Infringement Risks: Many LLMs are trained on scraped internet data, including copyrighted material. An exposed model that accidentally or intentionally reproduces this material can trigger costly copyright infringement lawsuits against the deploying organization.

III. The New Security Paradigm: MLOps and DevSecOps Integration

Addressing this crisis requires a fundamental shift from traditional IT security to a framework integrated into the entire Machine Learning Operations (MLOps) lifecycle, emphasizing DevSecOps principles. Security must be embedded from the initial data preparation stage through to model deployment.

A. Securing the Development and Training Pipeline

The most critical points of vulnerability are often the earliest: the data and the initial model design.

A. Training Data Provenance and Sanitization: All training data must be rigorously validated and authenticated. Input sanitization and guardrails are essential to filter out any malicious or corrupt inputs before they reach the model. Organizations must maintain a clear data lineage to track where every piece of training information originated.

B. Privacy-Preserving AI (PPAI) Techniques: To protect sensitive data even within a secure environment, advanced techniques must be adopted:

- Differential Privacy: Introducing controlled ‘noise’ to the data to prevent any single point of information from being identifiable while maintaining statistical integrity.

- Homomorphic Encryption: Allowing computations on encrypted data without ever decrypting it, ensuring the data remains protected even during processing.

- Federated Learning: Training models across multiple decentralized devices or servers without ever sharing the raw data.
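To make the first of these techniques concrete, the sketch below applies the Laplace mechanism to a counting query. Note that this variant adds noise to a query result rather than to the raw records, which is one standard way to obtain differential privacy. The function names and the sample data are hypothetical, and real deployments should use a vetted library rather than hand-rolled sampling.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of values matching `predicate`.

    A counting query changes by at most 1 when one record is added or removed
    (sensitivity 1), so the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical example: how many patients are over 60, released with epsilon = 1.0.
ages = [34, 71, 65, 29, 80, 45]
noisy = private_count(ages, lambda age: age > 60, epsilon=1.0)
```

Smaller values of `epsilon` add more noise and give stronger privacy; the noisy answer is centered on the true count of 3 but any single release may be off by a few units.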

C. Secure Configuration Management: Strict rules must govern the configuration of all underlying infrastructure. This includes immediately changing all default passwords, enabling firewalls to restrict access to the bare minimum necessary ports, and ensuring all services are bound to internal, non-public IP addresses.
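The bind-address rule is easy to audit automatically. The following sketch classifies a configured listen address using Python's standard `ipaddress` module; the service names and the `bindings` mapping are hypothetical stand-ins for whatever your configuration files actually contain.

```python
import ipaddress


def bind_exposure(bind_address):
    """Classify a listen address as all-interfaces, loopback, internal, or public."""
    addr = ipaddress.ip_address(bind_address)
    # Check the wildcard first: 0.0.0.0 also falls inside a reserved range.
    if addr.is_unspecified:
        return "all-interfaces"
    if addr.is_loopback:
        return "loopback"
    if addr.is_private or addr.is_link_local:
        return "internal"
    return "public"


def risky_services(bindings):
    """Return the names of services bound to every interface or to a public address."""
    return sorted(
        name
        for name, address in bindings.items()
        if bind_exposure(address) in ("all-interfaces", "public")
    )


# Hypothetical inventory of service name -> configured bind address.
bindings = {
    "vector-db": "0.0.0.0",
    "model-server": "127.0.0.1",
    "metrics": "10.0.4.12",
    "admin-ui": "8.8.8.8",
}
flagged = risky_services(bindings)
```

A wildcard bind is not always a vulnerability, for example inside a container whose ports are never published, so the output is a review list rather than a verdict.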

B. Hardening the Production and Inference Environment

Once the model is deployed for real-time use (inference), the focus must shift to constant monitoring and API protection.

A. Robust Access Control and Multi-Factor Authentication (MFA): No AI server or component should ever be accessible without MFA. Access should operate on the Principle of Least Privilege, meaning developers and services only have the permissions absolutely necessary for their function.

B. API Gateways and Input Validation: All external interactions with the AI model must pass through a secure API Gateway. This acts as a centralized enforcement point for rate limiting, input validation, and defenses against prompt-based attacks such as Prompt Injection, in which malicious inputs are crafted to override the model’s core instructions.

C. Real-Time Model Monitoring and Anomaly Detection: Continuous monitoring is non-negotiable. Systems must be in place to detect anomalous behavior, such as a sudden spike in model requests, unexpected model outputs, or attempts to access internal file systems. AI can also be used to secure AI: behavioral analytics can establish a baseline of normal model function and quickly flag deviations.
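The baseline-and-deviation idea can be illustrated with a simple z-score test on request counts. This is a deliberately small sketch: the function name, the threshold of 3 standard deviations, and the sample counts are assumptions, and production systems typically account for seasonality and use more robust statistics.

```python
import statistics


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag `observed` if it lies more than `threshold` standard deviations from the baseline mean.

    `baseline` must contain at least two historical measurements.
    """
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mean
    return abs(observed - mean) / stdev > threshold


# Hypothetical requests-per-minute history for one model endpoint.
requests_per_minute = [100, 104, 98, 101, 97, 103, 99, 102]
normal_minute = is_anomalous(requests_per_minute, 105)
spike_minute = is_anomalous(requests_per_minute, 400)
```

With this history, 105 requests in a minute falls within normal variation while 400 is flagged, which is the kind of sudden spike that may indicate scraping or an extraction attempt.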

C. The Role of Regulatory Technology (RegTech)

The burden of compliance is growing rapidly as AI adoption spreads. RegTech solutions use automation to handle that complexity.

A. Automated Compliance Audits: RegTech tools can automatically scan AI environments for policy violations, ensuring compliance with data residency and privacy rules, significantly reducing the manual effort of auditing.

B. Explainable AI (XAI) Documentation: Regulatory bodies increasingly demand Explainable AI (XAI)—the ability to articulate how a model arrived at a decision. RegTech solutions assist in generating clear, traceable logs and documentation required to meet regulatory standards, thus maintaining legal standing and public trust.

IV. A Call to Action for AI Security Leaders

The discovery of thousands of exposed AI servers is not a niche security story; it is a siren call for all technology-driven organizations. The integrity of corporate data, the trust of customers, and compliance with global law now depend on securing this new, complex computational frontier. AI Security must become a top-tier investment priority.

The future competitive advantage will not just belong to the companies that deploy the best AI, but to those that deploy the most secure and ethical AI. Ignoring the basic principles of secure configuration and DevSecOps in the rush to market is a gamble with corporate existence. The immediate, non-negotiable step is to scan your entire IT estate, identify all public-facing AI components, and place them behind a zero-trust access model.